Few would dispute the claim that a nation's science and technology (S&T) base is a critical element of its economic strength, political stature, and cultural vitality. In recent years, efforts to evaluate and assess research activity have increased. Government policy-makers, corporate research managers, and university administrators need valid and reliable S&T indicators for a variety of purposes: for example, to measure the effectiveness of research expenditures, identify areas of strength and excellence, set priorities for strategic planning, monitor performance relative to peers and competitors, and target emerging specialties and new technologies for accelerated development.

One of the many quantitative indicators available for S&T evaluation and assessment is the published research literature, that is, primary research journal articles. Publication counts have traditionally been used as indicators of the "productivity" of nations, corporations and institutions, departments, and individuals. However, judging the influence, significance, or importance of research publications requires qualitative analysis by experts in the field, an often time-consuming and expensive process.

The advent of citation databases, which track how often papers are referenced in subsequent publications, and by whom, has created new tools for indicating the impact of primary research papers. By aggregating citation data, it is then possible to indicate the relative impact of individuals, journals, departments, institutions and nations. In addition, citation data can be used to identify emerging specialties, new technologies and even the structure of various research disciplines, fields, and science as a whole.

This is not to say that citation data replace or obviate the need for qualitative analysis by experts in the field. Rather, they supplement expert judgments by providing a unique perspective on the S&T enterprise. Indeed, citation data themselves require careful and balanced interpretation to contribute most effectively to S&T evaluation and assessment.

Citation databases of ISI

The Institute for Scientific Information's (ISI) Science Citation Index (SCI) was developed primarily for the purpose of information retrieval. However, its quantitative citation databases are especially well-suited for application as S&T indicators for a number of reasons. For example, they are multidisciplinary, representing virtually all fields of science and the social sciences. Thus, ISI's databases can accommodate S&T analyses whose scope ranges from the narrowest focus on a particular subspecialty to the broadest perspective on science as a whole.

Also, ISI's databases are comprehensive, indexing all types of items that a journal publishes. These include not only original research papers, review articles, and technical notes but also letters, corrections and retractions, editorials, news features, and so on. ISI studies have shown that these items are significant means of scholarly communication.[1] Thus, the S&T analyst has great flexibility in choosing which types of items to include in an evaluation.

In addition, ISI fully indexes these items, including all authors' names, institutional affiliations and addresses, article titles, journal, volume, issue, year and pages. This enables S&T analyses of individual researchers, institutions and departments, cities or states or nations, journals, established and emerging specialties, and so on.

As noted earlier, ISI indexes not only all journal source items but also all the references they cite. This provides the basis for developing a variety of quantitative S&T indicators: not just output or productivity (number of papers) but also "impact" (average number of citations per paper, journal, author, institution, and so on), "citedness" (percent of total publication output that was later cited), and so on.
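The three indicators just named reduce to simple arithmetic over a set of papers. The following is a minimal sketch, not ISI's actual procedure; the `Paper` record, its field names, and the sample journal names are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    """Hypothetical record: one indexed paper and the citations it has received."""
    journal: str
    citations: int


def output(papers):
    """Output or productivity: the number of papers."""
    return len(papers)


def impact(papers):
    """Impact: average number of citations per paper (0.0 for an empty set)."""
    if not papers:
        return 0.0
    return sum(p.citations for p in papers) / len(papers)


def citedness(papers):
    """Citedness: percent of papers that were cited at least once."""
    if not papers:
        return 0.0
    cited = sum(1 for p in papers if p.citations > 0)
    return 100.0 * cited / len(papers)


# Invented sample data, for illustration only.
sample = [
    Paper("Hypothetical Journal A", 12),
    Paper("Hypothetical Journal A", 0),
    Paper("Hypothetical Journal B", 3),
    Paper("Hypothetical Journal B", 5),
]

print(output(sample), impact(sample), citedness(sample))
```

The same functions apply at any level of aggregation: the set of papers passed in can be those of one author, one journal, one institution, or one nation.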

At present, ISI's databases include about 15,000,000 papers published since 1945 and more than 200,000,000 references they cited. This offers the potential for extended time-series analyses of S&T trends to policy-makers, administrators, and managers as well as historians, sociologists, and information scientists.

The following sections illustrate the variety of analyses at different levels of specificity, from individual authors to entire nations, that are possible using citation data. The examples are taken from Science Watch, a monthly ISI newsletter reporting on citation-based trends and developments.[2]

Most-cited authors

Over the years, ISI has published several studies identifying the most-cited authors in various fields and covering different time periods. It should be noted that authors in larger fields achieve higher citation rates. Thus, undifferentiated citation rankings tend to be dominated by molecular biologists, geneticists, biochemists, and other life scientists while fewer authors in physics and chemistry, for example, are represented.

Table 1 identifies 28 authors who received more than 12,000 citations to papers indexed in the 1980-1991 SCI. It is interesting to note that five authors (18%) are Nobel Prize winners. In fact, this and previous most-cited author rankings have been shown to effectively identify groups or sets of authors "of Nobel class".[3] That is, not only are actual Nobelists identified, but authors who later go on to win the prize are also included.

It may seem remarkable that a simple, quantitative and objective algorithm can consistently anticipate a highly subjective and qualitative selection process. But this is not surprising, because citation data have been shown to correlate highly with other qualitative indicators of "prestige" or "eminence", such as peer ratings, academy memberships and so on.[4],[5],[6],[7],[8],[9]

While rankings of the most-cited authors are fairly straightforward, great care must be taken when using citation data to evaluate the impact of the average individual. These evaluations can be both revealing and reliable, but only when performed properly: with expert interpretation, peer assessment, and recognition of potential artifacts and limitations.[10]

High impact papers and journals

One of the most obvious uses of citation data is to indicate particular papers that have attracted the most attention from peer S&T authors. By varying the time span of citation and/or publication, historical "classics" and currently "hot" papers are readily identified. For example, ISI has published a series of essays on most-cited papers in the 1945-1988 SCI database.[11],[12] They provide an interesting perspective on formal research communication for S&T historians, sociologists, authors, editors, publishers, and so on.

Identifying "hot" papers through citation data enables S&T analysts to monitor current breakthroughs at the forefront of research in various specialties. For example, Table 2 lists the ten hottest biology papers at year-end 1991. These and other such papers in different fields, specialties, and particular research topics are derived from a special ISI database. It is a cumulative three-year file, updated bimonthly, of about 1,000,000 papers that meet two criteria. They were published within the previous 24 months in SCI-indexed journals, and they were highly cited in the most recent two months.
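The two criteria for a "hot" paper can be expressed as a simple filter. This is a sketch under stated assumptions: the text does not say how many citations count as "highly cited", so the cutoff `min_recent_citations` is a hypothetical parameter, as are the `PaperRecord` fields and the sample data.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PaperRecord:
    """Hypothetical record for one SCI-indexed paper."""
    title: str
    published: date
    recent_citations: int  # citations received in the most recent two months


def months_between(earlier, later):
    """Whole calendar months from `earlier` to `later` (days are ignored)."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


def hot_papers(records, as_of, min_recent_citations, max_age_months=24):
    """Keep papers published within `max_age_months` of `as_of` whose recent
    citation count reaches the assumed cutoff; most-cited papers come first."""
    hot = [
        r for r in records
        if 0 <= months_between(r.published, as_of) <= max_age_months
        and r.recent_citations >= min_recent_citations
    ]
    return sorted(hot, key=lambda r: r.recent_citations, reverse=True)


# Invented sample data, for illustration only.
records = [
    PaperRecord("A", date(1990, 6, 1), 40),   # recent and highly cited
    PaperRecord("B", date(1988, 1, 1), 90),   # highly cited but too old
    PaperRecord("C", date(1991, 3, 1), 5),    # recent but rarely cited
    PaperRecord("D", date(1991, 1, 15), 25),  # recent and highly cited
]

for paper in hot_papers(records, as_of=date(1991, 12, 31), min_recent_citations=20):
    print(paper.title, paper.recent_citations)
```

In practice the cutoff would presumably vary by field, since citation rates differ between specialties; a single global threshold is used here only to keep the sketch short.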

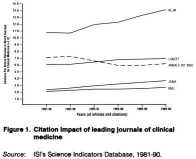

Aggregated at the next level, citation data can also be used to indicate the highest-impact journals in different fields and specialties and over varying time frames. ISI's Journal Citation Reports (JCR) volumes of the SCI and Social Sciences Citation Index (SSCI) present a variety of quantitative rankings on thousands of journals annually. From these data, sophisticated time-series comparisons between journals can be made, as shown in Figure 1.

The chart shows the relative rankings by citation impact (average citations per paper) of the five leading clinical medicine journals in the SCI database. In this example, impact was calculated for six successive and overlapping five-year periods of publication and citation, from 1981-1985 to 1986-1990. The impact of each journal was then compared with the average for all SCI-indexed clinical medicine journals.
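The windowing and the comparison against the field average can be sketched as follows. The text does not state whether the comparison is a ratio or a difference; this sketch assumes a simple ratio, and all function names and figures are hypothetical.

```python
def five_year_windows(first_start, last_start, length=5):
    """Successive overlapping windows, e.g. (1981, 1985) through (1986, 1990)."""
    return [(start, start + length - 1) for start in range(first_start, last_start + 1)]


def relative_impact(journal_citations, journal_papers, field_citations, field_papers):
    """Journal impact divided by field impact (assumed form of the comparison).

    A value of 1.0 means the journal matches the field average for the window.
    """
    journal_impact = journal_citations / journal_papers
    field_impact = field_citations / field_papers
    return journal_impact / field_impact


windows = five_year_windows(1981, 1986)
print(windows)

# Invented counts for one journal and its field in a single window.
print(relative_impact(3000, 100, 50000, 5000))
```

Computing the ratio separately for each window yields the kind of time series that a chart such as Figure 1 plots.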

Leading universities and corporations

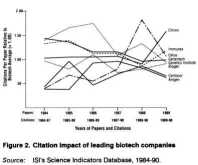

From the author affiliation and address data on articles indexed and cited in ISI's databases, time-series rankings of leading institutions in different fields and specialties are available for S&T analyses. For example, the highest-impact universities and companies in electrical engineering are shown in Table 3. Figure 2 compares the relative impact of eight biotechnology firms from 1984 to 1990.

The application of these citation-based institutional rankings and trends as S&T indicators is obvious. For example, university administrators and corporate managers can compare their performance with peers and competitors. Government and private funding sources can monitor the return on their S&T investment. Policy-makers can identify relative strengths and weaknesses in strategically important S&T sectors.

National comparisons

Of course, citation data can also be aggregated to the national level, enabling comparisons of entire countries on a variety of quantitative indicators for S&T analyses. In Figure 3, the impact of the Group of Seven (G7) nations in engineering, technology, and the applied sciences is charted from 1981 to 1990. The trends provide a new perspective on relative S&T performance and an additional quantitative basis for assessing and evaluating nations.

Analyses of relative performance in "hot" research areas at the forefront of a particular specialty are also possible through ISI's citation databases. For example, Table 4 lists ten research fronts in which Japan and Germany dominate and the USA is under-represented. They were derived from a 1990 file of more than 8,000 specialty areas identified through co-citation analysis.[13],[14]

Basically, each research front consists of a "core" of papers cited together frequently by authors in 1990, together with the current papers that cite them. The proportion of core papers from Japan and Germany is at least twice the level expected from their average representation in the entire 1990 file. In this example, the research fronts are also ranked by three-year immediacy, that is, the percentage of core papers published in the previous three years. These and other research front rankings enable S&T analysts to compare national performance in various areas of intrinsic interest, commercial potential, or strategic importance.
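The three quantities behind these research-front rankings (co-citation frequency, national over-representation, and immediacy) can be illustrated with a short sketch. It is not ISI's clustering procedure. The function names and sample data are hypothetical, and "the previous three years" is assumed here to include the file year itself (1988-1990 for a 1990 file).

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Count how often each pair of papers appears together in a reference list."""
    counts = Counter()
    for refs in reference_lists:
        # Deduplicate and sort so each pair is counted once per citing paper.
        for pair in combinations(sorted(set(refs)), 2):
            counts[pair] += 1
    return counts


def representation_ratio(core_countries, country, baseline_share):
    """Share of core papers from `country`, divided by its share of the whole file.

    A ratio of 2.0 or more corresponds to "at least twice the level expected".
    """
    share = sum(1 for c in core_countries if c == country) / len(core_countries)
    return share / baseline_share


def immediacy(core_years, file_year, span=3):
    """Percent of core papers published in the `span` years ending with `file_year`."""
    recent = sum(1 for y in core_years if file_year - span < y <= file_year)
    return 100.0 * recent / len(core_years)


# Invented sample data, for illustration only.
reference_lists = [["p1", "p2", "p3"], ["p1", "p2"], ["p2", "p3"]]
print(cocitation_counts(reference_lists).most_common())
print(representation_ratio(["JP", "JP", "US", "DE"], "JP", baseline_share=0.25))
print(immediacy([1990, 1989, 1985, 1988], file_year=1990))
```

Pairs with high co-citation counts are the raw material from which a core is formed; how the pairs are thresholded and clustered into fronts is outside the scope of this sketch.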

Potential limitations

As stated earlier, citation data require careful and balanced interpretation to be most effective in S&T analyses.[15],[16] Like any quantitative indicator, citation data have inherent limitations. They are most obvious at the individual level (studies of a particular author or journal, for example). But their importance wanes at higher levels of data aggregation and larger sample populations: for example, comparisons of authors, journals, institutions and nations against appropriate baselines.

A frequently raised question is whether citations reflect agreement or disagreement with the referenced paper. In the hard sciences, citations generally tend to be positive, representing the formal acknowledgment of prior sources that contributed to the citing author's research. Of course, there are occasional exceptions, such as the cold fusion controversy, but these are well known and obvious. In the social sciences, however, critical citations are more common. Thus, raw citation counts may not be indicative of an author's or paper's positive impact in the social sciences, and the context and content of citations should be examined.

Self-citation is another frequently raised caveat. That an author cites his or her own prior research is a legitimate and expected practice, since science is a cumulative process that builds on past findings. But excessive self-citation may lead to inflated impact rankings of authors or papers. Presumably, excessive self-citation would become apparent, and be corrected, in the editorial and peer review process. In any case, self-citations are readily identified and can be subtracted from or otherwise weighted against an author's or paper's total citation count.
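Subtracting self-citations is straightforward once a rule for recognizing them is fixed. The sketch below assumes one such rule: a citation counts as a self-citation when the citing and cited papers share at least one author name. The function name and sample names are hypothetical, and real data would also need author-name disambiguation, which is not attempted here.

```python
def split_citations(cited_authors, citing_author_lists):
    """Return (external_citations, self_citations) for one cited paper.

    Assumed rule: a citing paper is a self-citation if it shares at least
    one author name with the cited paper.
    """
    cited = set(cited_authors)
    self_cites = sum(1 for authors in citing_author_lists if cited & set(authors))
    return len(citing_author_lists) - self_cites, self_cites


# Invented sample data: one cited paper and the author lists of three citing papers.
external, own = split_citations(
    ["Smith J"],
    [["Smith J", "Lee K"], ["Jones A"], ["Lee K"]],
)
print(external, own)
```

Reporting both numbers, rather than only the adjusted total, lets the analyst judge whether the level of self-citation is unusual for the field.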

Citation circles are related to the phenomenon of self-citation. That is, groups of researchers might theoretically "conspire" to preferentially cite only the work of authors in the group. However, in order for this to unfairly "skew" citation and impact rankings, authors in a purported citation circle must be rather prolific, that is, they must publish a substantial number of papers in order to "inflate" the group's ranking.

While citation circles are much talked about, they are rarely, if ever, documented and identified. The problem is that it would be difficult to distinguish between a citation circle and an invisible college, that is, colleagues who legitimately share common research interests and build on, and cite, one another's papers. This is especially true in small and emerging subspecialties in which a comparatively small group of authors is active.

Another purported shortcoming of citation analyses is that methods papers tend to be identified far more frequently than theoretical papers. This perception is not necessarily supported by ISI studies of the most-cited papers or authors in various fields; breakthrough theoretical contributions also appear in these rankings. The perception also reflects a curious prejudice of scientists, who seem to value theory more highly than methods.

Practically speaking, new methods and technologies that enable researchers to study phenomena previously inaccessible by conventional techniques or that allow them to conduct research more quickly, efficiently and cost-effectively are indeed valuable contributions that deserve recognition. In fact, the Nobel Prizes have honored breakthrough methods and technologies, for example, computerized axial tomography, scanning and tunneling electron microscopy, and so on.

The obliteration phenomenon must also be taken into account when applying citation data to S&T evaluations. This refers to a well-known process in which breakthrough advances (for example, Einstein's theory of relativity or Watson and Crick's description of DNA's double-helix structure) are paradoxically cited less frequently over time.

Such landmark discoveries are quickly incorporated into the generally accepted body of scientific knowledge, and authors no longer feel the need to explicitly cite the original paper. However, citation obliteration tends to occur many years after the paper was published; in the first few years, these papers achieve extraordinary citation frequencies and are thus easily identified as "hot" or breakthrough contributions.

Lastly, publication and citation data are "lagging indicators" of research that has already been completed and passed through the peer review and publishing cycle, which can take as long as two years, depending on the field. Of course, especially important papers can appear in print within weeks of submission to a journal, and they become "hot" or very highly cited almost immediately. In any case, citation data still represent the scientific community's current assessment of the impact of earlier research. Thus, citation data retain their value for S&T evaluations, since they indicate what is considered important in the opinion of investigators currently active in the field.

Conclusion

In conclusion, publication and citation data offer the potential to develop new quantitative, objective indicators of S&T performance. While they have their limitations, as do any quantitative indicators, most, if not all, of these limitations can be statistically weighted, controlled or otherwise compensated for. Properly applied, interpreted, and analyzed, citation data are a valuable and revealing addition to conventional methods, both quantitative and qualitative, used in the S&T evaluation and assessment process.

References

1. E Garfield, "Which medical journals have the greatest impact?", Annals of Internal Medicine, 105, 1986, pages 313-320.

2. E Garfield, "Science Watch: ISI's highly cited newsletter shows how quantitative assessments of the literature can contribute to R&D management, policymaking, and strategic analysis", Essays of an Information Scientist: Journalology, KeyWords Plus, and Other Essays, vol 13 (ISI Press, Philadelphia, 1991) pages 440-446.

3. E Garfield and A Welljams-Dorof, "Of Nobel class: a citation analysis of high impact authors", Theoretical Medicine, 13, 1992, pages 117-135.

4. J R Cole and S Cole, Social Stratification in Science (University of Chicago Press, Chicago, 1973).

5. J R Cole and S Cole, "Scientific output and recognition: a study in the operation of the reward system in science", American Sociological Review, 32, 1967, pages 377-390.

6. A E Bayer and J Folger, "Some correlates of a citation measure of productivity in science", Sociology of Education, 39, 1966, pages 381-390.

7. S M Lawani and A E Bayer, "Validity of citation criteria for assessing the influence of scientific publications: new evidence with peer assessment", Journal of the American Society for Information Science, 34(1), 1983, pages 59-66.

8. L C Smith, "Citation analysis", Library Trends, 30, 1981, pages 83-106.

9. J A Virgo, "A statistical procedure for evaluating the importance of scientific papers", Library Quarterly, 47, 1977, pages 415-430.

10. E Garfield, "How to use citation analyses for faculty evaluations, and when is it relevant? Parts 1 and 2", Essays of an Information Scientist, vol 6 (ISI Press, Philadelphia, 1984) pages 354-362; 363-372.

11. E Garfield, "The most-cited papers of all time, SCI 1945-1988": "Part 1A. The SCI top 100 - will the Lowry method ever be obliterated?", "Part 1B. Superstars new to the SCI top 100", "Part 2. The second 100 Citation Classics", "Part 3. Another 100 from the Citation Classics hall of fame", Essays of an Information Scientist: Journalology, KeyWords Plus, and Other Essays, vol 13 (ISI Press, Philadelphia, 1991) pages 45-56; 57-67; 227-239; 305-315.

12. E Garfield, "The most-cited papers of all time, SCI 1945-1988. Part 4. The papers ranked 301-400", Current Contents, 21, 27 May 1991, pages 5-16.

13. H Small, "Co-citation in the scientific literature: a new measure of the relationship between two documents", Journal of the American Society for Information Science, 24, 1973, pages 265-269.

14. H Small and E Garfield, "The geography of science: disciplinary and national mapping", Journal of Information Science, 11, 1985, pages 147-159.

15. E Garfield, "Uses and misuses of citation frequency", Essays of an Information Scientist: Ghostwriting and Other Essays, vol 8 (ISI Press, Philadelphia, 1986) pages 403-409.

16. E Garfield, "Citation Indexing: Its Theory and Application in Science, Technology, and Humanities" (John Wiley, New York, 1979).