THE USE OF JOURNAL IMPACT FACTORS AND CITATION ANALYSIS FOR EVALUATION OF SCIENCE

the 41st Annual Meeting of the Council of Biology Editors, Salt Lake City, UT,

May 4, 1998 - April 17, 1998

Eugene Garfield

Chairman Emeritus

Institute for Scientific Information®

3501 Market Street

Philadelphia, PA 19104,

USA

Publisher, The Scientist®,

3600 Market Street

Philadelphia, PA 19104, USA

Tel. 215-243-2205

email: garfield@codex.cis.upenn.edu

JOURNAL IMPACTS AS SURROGATES

From the outset, let me state the obvious. It is dangerous to use any kind of statistical data out of context. The use of journal impact factors as surrogates for actual citation performance is to be avoided if at all possible. However, let me give you a few examples where avoiding it was not possible and the use was justifiable.

Several years ago, the Soros Foundation wanted to help Russian scientists in desperate need of funds. How could they, within a three-month period, decide which of 20,000 applicants should receive financial aid?

An arbitrary decision was made: if the applicant had published a paper in a journal with an impact of X, he or she would receive an immediate short-term stipend.

As you can imagine, those Russian scientists who regularly published in international high impact journals had an advantage over those who exclusively published in Russian language journals or other low impact journals. Few Russian language journals achieve better than average impacts. Those that are translated cover to cover do somewhat better, but nothing like what one might assume. Many of my friends, e.g. in the small field of marine biology, were initially hurt by this policy. Even many English language journals in that field do not achieve impacts comparable to fields like physics, biochemistry, etc. But as time passed and normal peer review procedures could be implemented, scientists even in small or low-impact fields were better accommodated.

It is reasonable to expect that higher impact work is generally reported in English and not in vernacular languages. But surely the Soros Foundation could not say that it would not fund Russian scientists who had exclusively published in Russian. Some Soviet physicists and chemists benefited because cover-to-cover translation journals not only promoted their work, but in some cases artificially increased their impact. The translated versions systematically cited the original Russian papers. However, it is widely believed that if your work is available in English, this alone may increase its citation. We have no significant verification of that. One recent study of the Pasteur Institute journals claims that switching from French to English had no discernible effect on impact, but that would imply that only French scientists writing in English or French did the citing.[1]

Let me cite another example. Over 20 years ago, the then new director of the Italian National Research Council adopted a policy similar to that of Soros. However, he established a very low threshold of journal impact. He did not even inquire whether the individual's work had ever been cited. He was not surprised to learn how many Italian clinical researchers had not published a paper at all, let alone in low-impact journals. And many who did publish chose vernacular journals mainly supported by the pharmaceutical industry.

It is well known how politics has affected Italian research funding policies in the past, but today the situation has improved. The interest in journal impact in that country may have started with Professor Luigi Rossi-Bernardi but has accelerated. The recent publication of a book in Italian covering this phenomenon is significant.[2] The Weight of Academic Quality by Spiridione and Calza was discussed at a G7 Conference in Capri about two years ago. The authors clearly understand how to use citation data appropriately.

So the use of journal impact factors as surrogates can be justified in certain situations. The use in these cases is simply another way of determining whether a scientist had or had not published in a journal of at least minimal prestige. The mere acceptance of a paper in such a journal makes a statement. Even if that paper is never cited, the fact that a respected peer-reviewed journal accepted it means that the scientist met some minimum international standard. Now we know that this generalization is not always true. I regularly see papers that should never have been published. But we hope that the peer review process minimizes the publication of trivial, me-too, salami-slice science. I will leave it to Per Seglen and others to beat the drum further on the question of using journal impacts as surrogates for real citation evaluation. (Figure 1)

Figure 1

Let me turn to the kind of quantitative and qualitative evaluation I have pursued for almost four decades. In 1961, we had to make quick decisions about which journals to cover in the Science Citation Index®. We had some experience with Current Contents®, so we were especially interested in current impact. Over the past 35 years, ISI's journal coverage has grown from 600 to over 8,000 journals. Can anyone seriously question that the most significant journals are included? Of course, some small journals that may be considered important to a particular nation or specialty are still not covered as sources. But this alone does not prevent them from being cited.

There are always new journals arising, and now, even purely electronic journals. ISI® is always running just to keep in place. Medline has a similar problem. But there can be little doubt that by using ISI's own database, combined with knowledge of experts, the key journals will be identified. But the task of keeping up is not trivial.

There are at least three ISI products which report on journal performance and journal-to-journal relationships. The best known is Journal Citation Reports®. In the next series of slides, I've extracted information from JCR® for 1996. The 1997 edition won't be ready until the fall. JCR has been around for over 20 years so I won't go into any details. Contact Janet Robertson at ISI if you have any problems.

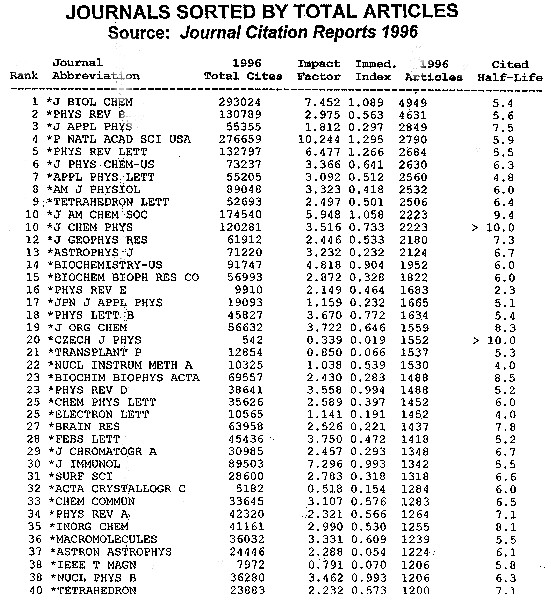

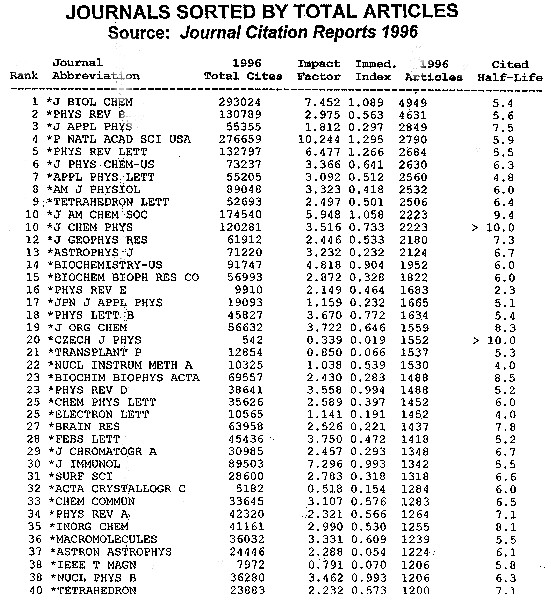

Figure 2 shows 40 journals ranked by article output and includes all those publishing 1200 or more items per year. This list is dominated by the physical sciences where big journals are a tradition.

Figure 2

In Figure 3, we've listed the top 40 biomedical journals with 700 or more articles per year. I won't comment here on immediacy or cited half-life.

Figure 3

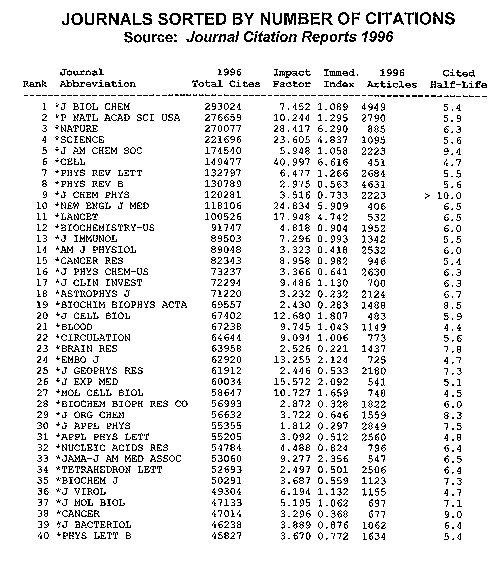

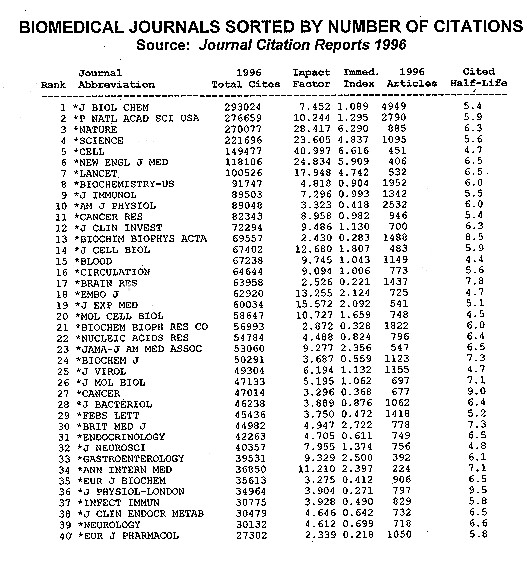

Figure 4: Here we've ranked all journals by total number of citations received in 1996, regardless of the year of the cited item. This would favor old established journals. A journal needs over 45,000 citations to qualify here.

Figure 4

In Figure 5, we've selected biomedical journals. A journal needs over 27,000 citations to qualify here.

Figure 5

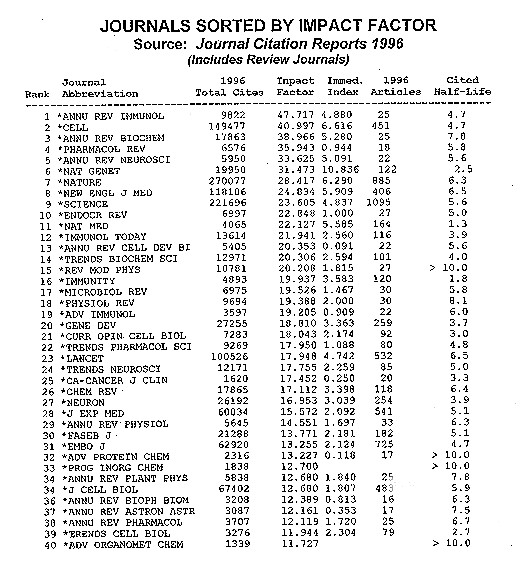

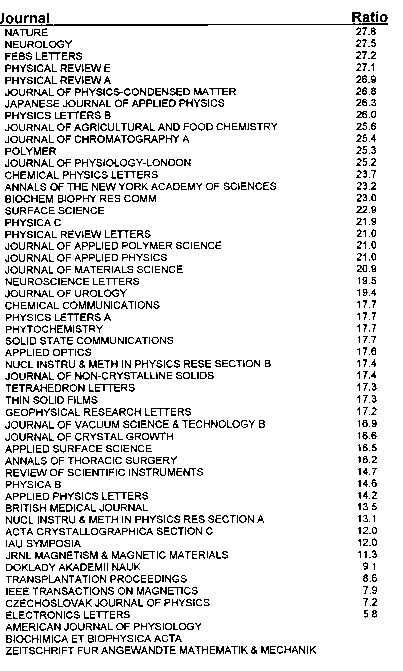

In Figure 6, we've sorted journals by impact factor. This favors the review journals. Please ignore the three decimal places as they have no significance.

Figure 6

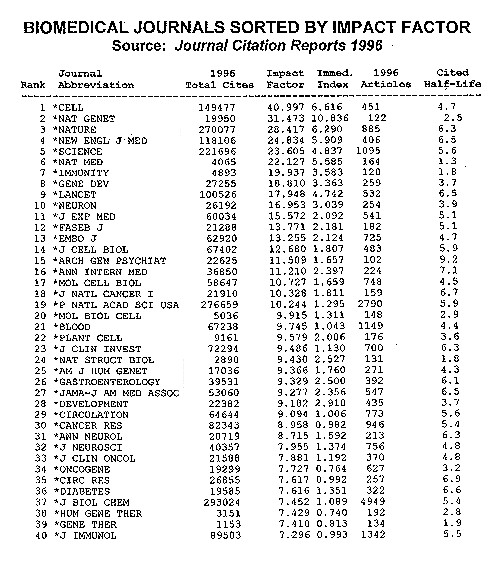

In Figure 7 we have eliminated review journals and again limited the list to biomedicine.

Figure 7

For decades, many editors complained that current two-year impacts provide a distorted picture and tend to favor journals in hot fields like molecular biology. The fact is that this was the intent, as these journals were the prime focus of Current Contents®.

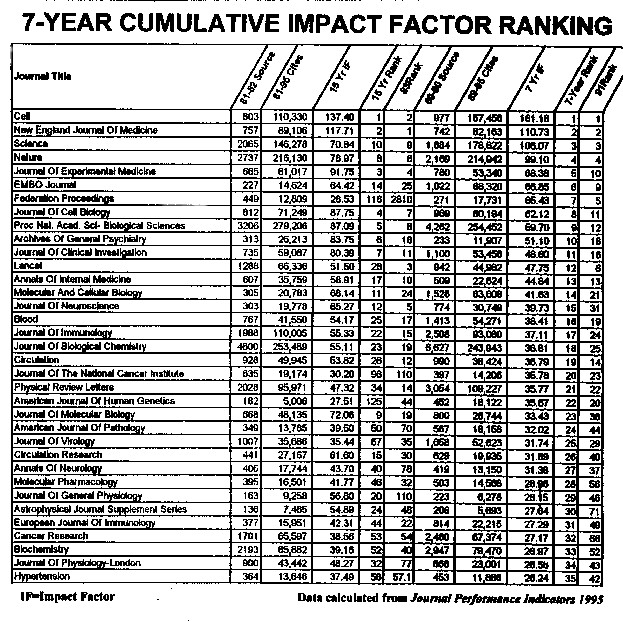

The next series of six slides provides a new perspective on impact. Instead of two-year impacts, we've calculated 15-year vs. 7-year impact data. The first 100-journal list was published in The Scientist on February 2, 1998. You can download the file from The Scientist's website and study it more carefully.[3] All six slides relate to 15-year impacts for articles published in 1981-82. We then compare the new ranking to that for the 1983 impact factor.
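A long-term impact of this kind can be pictured as a cumulative ratio: the citations a cohort of articles receives over a window of years, divided by the number of articles in the cohort. The sketch below is a minimal illustration under that assumption. The function name `cumulative_impact` and all the yearly counts are hypothetical, not ISI data or ISI's exact procedure, and for simplicity both windows are applied to the same invented cohort.

```python
from typing import Sequence


def cumulative_impact(yearly_citations: Sequence[int], article_count: int, window: int) -> float:
    """Citations received in the first `window` years, per article in the cohort."""
    if article_count <= 0:
        raise ValueError("article_count must be positive")
    if not 0 < window <= len(yearly_citations):
        raise ValueError("window must be between 1 and the number of years supplied")
    return sum(yearly_citations[:window]) / article_count


# Hypothetical cohort: 200 articles, with invented citation counts for 15 years.
citations_by_year = [150, 600, 700, 650, 600, 550, 500,
                     450, 400, 380, 350, 320, 300, 280, 270]

seven_year = cumulative_impact(citations_by_year, article_count=200, window=7)
fifteen_year = cumulative_impact(citations_by_year, article_count=200, window=15)
print(f"7-year: {seven_year:.2f}, 15-year: {fifteen_year:.2f}")
```

Ranking journals by either value is then a matter of sorting on the chosen window.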

There are some interesting surprises in the new rankings, but overall it is too early to tell their significance within each category. The field of physiology shows up much better in the long-term than in the short-term ranking, but I don't think the rankings within the category change much.

In Figure 9, I've sorted the file by 7-year cumulative impact. Surprisingly, some of the 7-year impacts are higher than the 15-year impacts due to a number of factors, including the growth of the literature of about 4% per year.

Figure 9
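To get a feel for what growth of about 4% per year amounts to over windows of this length, the compounding can be worked out directly. This is only an arithmetic sketch assuming a constant 4% annual rate. `growth_factor` is a hypothetical helper, and how growth actually feeds into the impact figures is not modeled here.

```python
def growth_factor(annual_rate: float, years: int) -> float:
    """Compound growth multiplier after `years` at `annual_rate` (0.04 means 4%)."""
    return (1.0 + annual_rate) ** years


# Assumed constant rate of 4% per year, taken from the figure quoted in the text.
for span in (7, 15):
    print(f"After {span} years at 4%: x{growth_factor(0.04, span):.2f}")
```

Under this assumption the literature is roughly a third larger after seven years and about 80% larger after fifteen.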

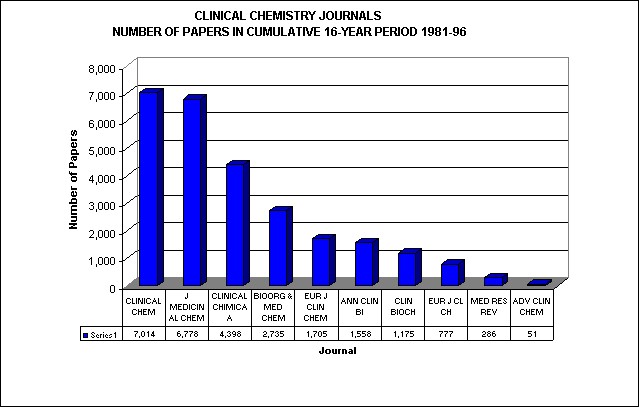

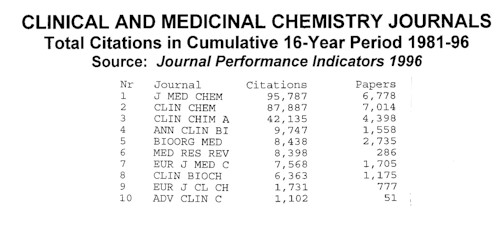

In the next figure, I've illustrated what the Journal Performance Indicators™ can show for the category of journals called Clinical and Medicinal Chemistry. As I will demonstrate, this is a dubious grouping.

Figure 10

Figure 11 provides another perspective for the 16-year period.

Figure 11

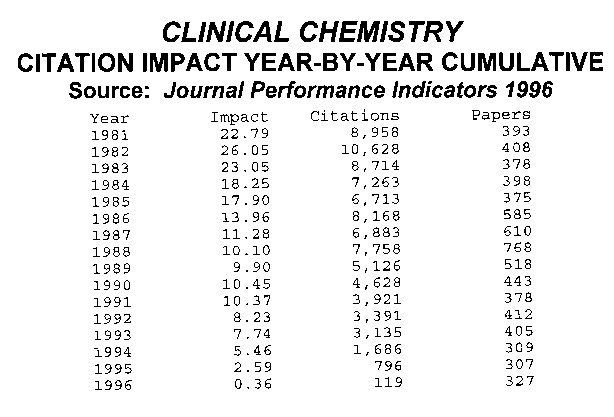

Figure 12 provides year-by-year cumulative impact. Some years may be affected by super-cited papers.

Figure 12

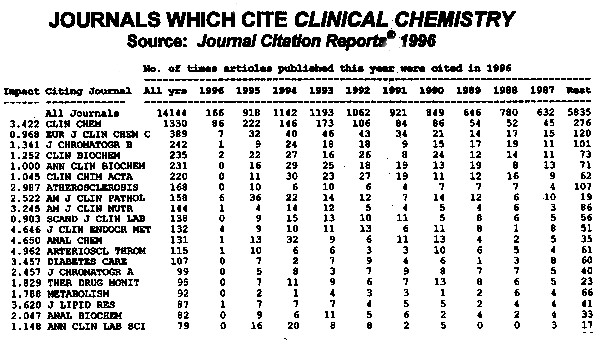

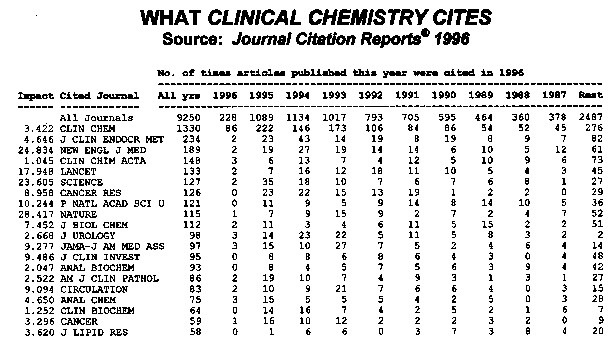

Switching back to the JCR, Figure 13 demonstrates how JCR helps you visualize journal-to-journal interactions and shows that lumping medicinal and clinical chemistry together makes little sense.

Figure 13

The data in Figure 14 show how diffused Clinical Chemistry is and how heavily the high impact biomedical journals are cited.

Figure 14

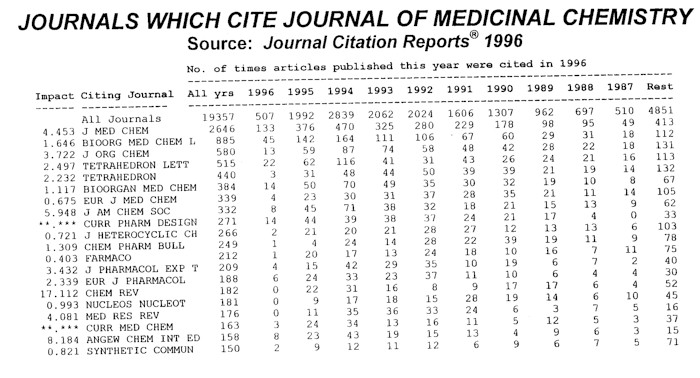

By comparison, the field of medicinal chemistry is highly dependent upon organic chemistry and pharmacology.

Figure 15

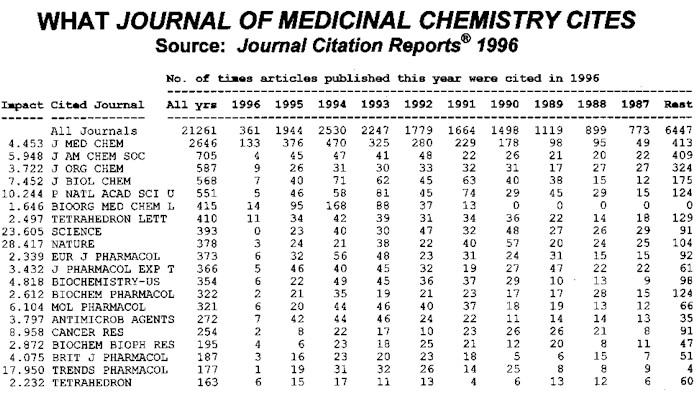

Figure 16 shows that the journal is connected to biochemistry in what it cites, but the biggest contributors are JACS and the Journal of Organic Chemistry.

Figure 16

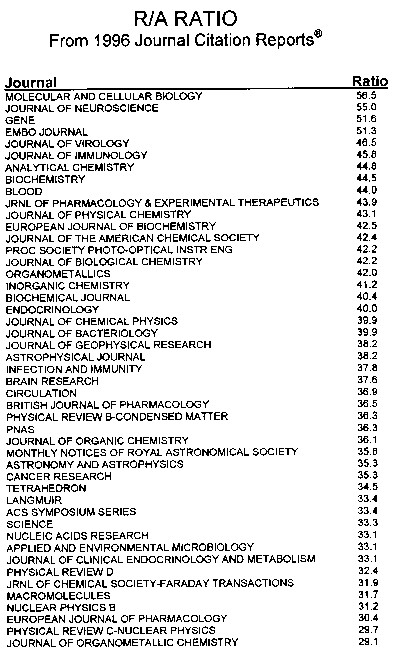

Figures 17A & 17B: R/A TABLE

These data are taken from the Journal Citation Reports. There is considerable variation in the R/A ratio.

Figure 17A

Figure 17B

Myth about Size of Field and Impact Factor

The Journal of Biological Chemistry published about 10,000 papers in 1994 and 1995. These papers were cited 71,167 times in 1995. So its current impact factor was 7.45. However, it ranked only 85th by impact, even though it published more articles in 1996, and over the past 20 years, than any other journal in the world. If size were the primary determinant, this journal should have the highest impact. But that is the point: the size of the biochemical literature, or of any other, does not by itself determine impact. A large field does indeed produce many citations, but there are also more papers that need to share those citations, so more citations are needed to keep up the average.
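The arithmetic behind these figures can be sketched as a simple ratio of citations to papers. The citation count and the impact factor below come from the text. The paper count of 9,553 is not a reported number but the value implied by dividing one by the other, consistent with "about 10,000". The function name `impact_factor` is hypothetical.

```python
def impact_factor(citations: int, items: int) -> float:
    """Citations received by a journal's recent papers, per paper."""
    if items <= 0:
        raise ValueError("items must be positive")
    return citations / items


JBC_CITATIONS = 71_167  # from the text
JBC_IMPACT = 7.45       # from the text

# Paper count implied by the two quoted figures (an inference, not a reported value).
implied_items = round(JBC_CITATIONS / JBC_IMPACT)
recomputed = round(impact_factor(JBC_CITATIONS, implied_items), 2)
print(implied_items, recomputed)

# Size alone does not move the average: doubling papers and citations
# together leaves the ratio unchanged.
doubled = impact_factor(2 * JBC_CITATIONS, 2 * implied_items)
print(doubled == impact_factor(JBC_CITATIONS, implied_items))
```

The last comparison is the point of the paragraph above: a field twice the size, with twice the citations to share among twice the papers, has the same impact.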

If the size of a literature does not determine impact, what does? As the slide shows, in 1996, JBC articles cited an average of 42 references per source article. The journal also had a relatively high immediacy of 1.1. For a detailed discussion of this point, see my paper in Current Contents many years ago.[4] So it is the number of references per article and their recency that largely determine current impact. A more recent discussion appeared last year in The Scientist.[5]
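For readers unfamiliar with immediacy, it is commonly computed as the citations received in a given year by papers published in that same year, divided by the number of those papers. The sketch below assumes that definition. The counts are invented, chosen only so that the result matches the 1.1 quoted above, and `immediacy_index` is a hypothetical name.

```python
def immediacy_index(same_year_citations: int, items_published: int) -> float:
    """Citations in a year to papers published that same year, per paper."""
    if items_published <= 0:
        raise ValueError("items_published must be positive")
    return same_year_citations / items_published


# Hypothetical counts, not JBC data: 5,500 same-year citations to 5,000 papers.
example = immediacy_index(same_year_citations=5_500, items_published=5_000)
print(round(example, 2))
```

A value above 1 means the average paper is already cited more than once in its year of publication, which is one way of seeing how recent the references in a field are.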

Let me add another point about social science and other applied fields where the number of non-journal references is quite high. Fifty percent of the references in the average social science journal article are to books and other non-journal items.

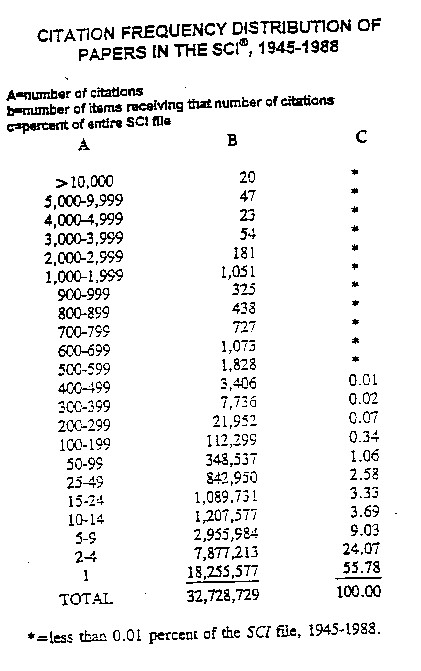

Figure 18 shows the citation frequency distribution of papers in the SCI, 1945-1988.

The size of a literature also has a significant effect in determining the number of papers that qualify as Citation Classics. These data show that only about 10,000 papers qualified as Citation Classics back in 1988.

Figure 18

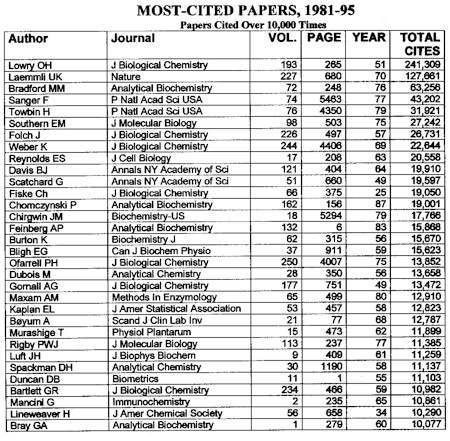

As Figure 19 (MOST-CITED PAPERS, 1981-95) will show, these are dominated by journals in large fields. In particular, the method papers are usually what I call Super Citation Classics. All of these were cited over 10,000 times in 1981-95.

To reiterate, the size of the literature does affect the number of Citation Classics one can expect. With a large population of papers, there is a much greater probability for highly cited papers to occur.

Figure 19
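The point about population size can be made concrete with a deliberately simple probability sketch. It assumes every paper has the same small, independent chance of becoming highly cited, which is an idealization and not a claim about real citation distributions. The rate `P_CLASSIC` and both function names are hypothetical.

```python
def expected_classics(paper_count: int, p_classic: float) -> float:
    """Expected number of highly cited papers among `paper_count` papers."""
    return paper_count * p_classic


def prob_at_least_one(paper_count: int, p_classic: float) -> float:
    """Chance that at least one of `paper_count` papers becomes highly cited."""
    return 1.0 - (1.0 - p_classic) ** paper_count


P_CLASSIC = 0.0005  # hypothetical per-paper chance, chosen only for illustration

for field_size in (1_000, 100_000):
    expected = expected_classics(field_size, P_CLASSIC)
    chance = prob_at_least_one(field_size, P_CLASSIC)
    print(f"{field_size} papers: expect {expected:.1f}, P(at least one) = {chance:.3f}")
```

Under these assumptions the expected count scales directly with the size of the literature, even though the per-paper chance is identical in the small and the large field.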

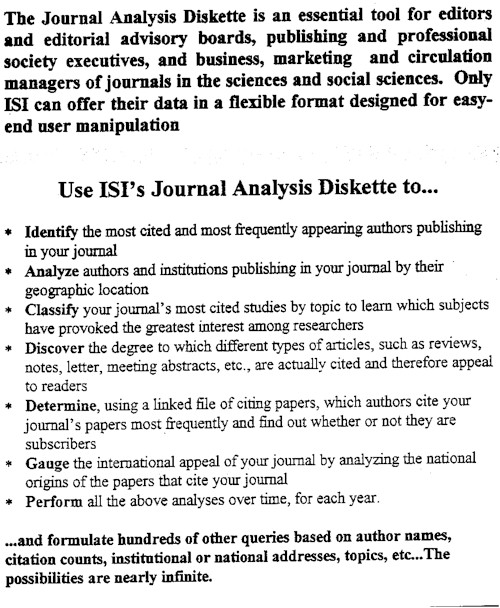

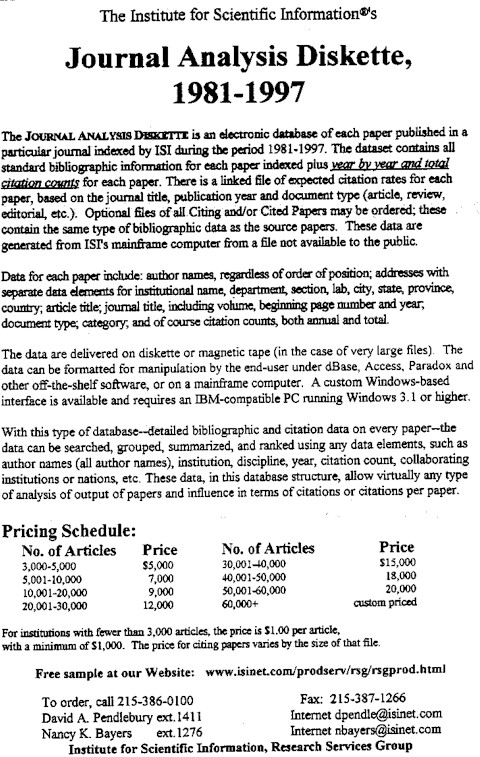

Figures 20 & 21 give information on Journal Analysis Diskettes.

Let me wind up by mentioning a third source of ISI journal data called Journal Analysis Diskettes™. These are essentially comprehensive selections of data from the ISI file and provide an article-by-article audit for each paper published.

Figure 20

Figure 21

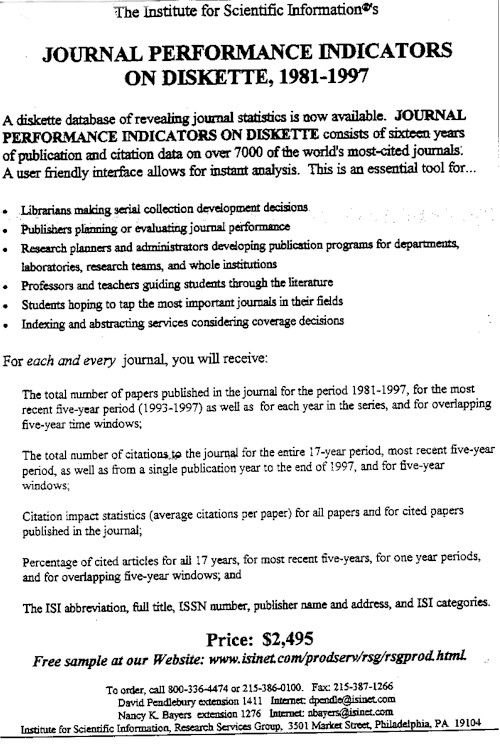

Figure 22: JOURNAL PERFORMANCE INDICATORS ON DISKETTE

Figure 22

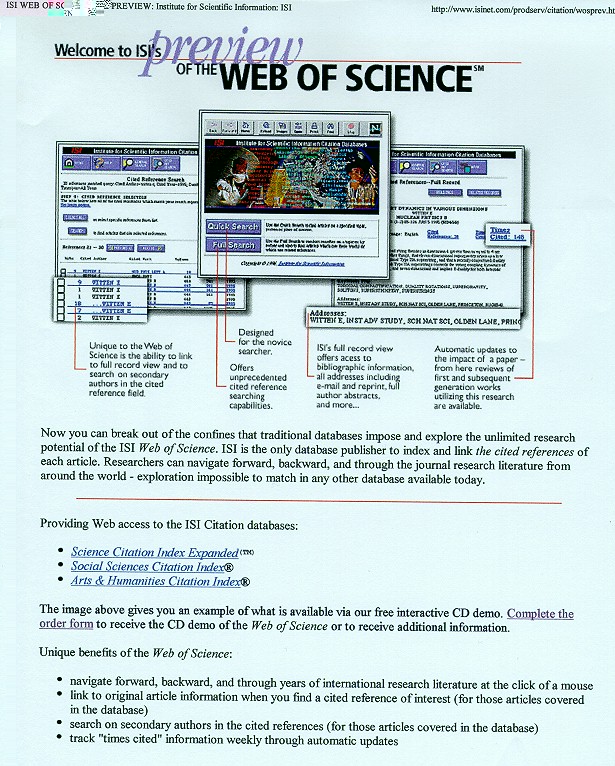

Figure 23: WEB OF SCIENCE

Figure 23

As a concluding remark, let me mention that a new source of convenient data on journals is the ISI Web of Science. This 25-year database is available on the Internet. I understand that after my talk there is time for demonstrations using the live files, but if you can't make the demo, you can try it out from home or contact your librarian to find out whether your institution is part of one of the many consortia that already subscribe. As a faculty member at Penn, I can access this file.

REFERENCES:

1. BrachoRiquelme RL, PescadorSalas N, & ReyesRomero MA. "Bibliometric repercussions of adopting English as the language of publication," Revista de Investigacion Clinica 49(5):369-372 (September-October 1997).

2. Spiridione, G. & Calza, L. Il Peso Della Qualita Accademica. Cooperativa Libraria Editrice Universita di Padova, 1995, 123 pgs.

3. Garfield, E. "Long-term vs short-term journal impact factor: Does it matter?" The Scientist 12(3):10-12 (February 2, 1998).

4. Garfield, E. "Is the ratio between number of citations and publications cited a true constant?" Current Contents No. 8: 5-7 (February 9, 1976). Reprinted in Essays of an Information Scientist, Volume 2. Philadelphia: ISI Press, pgs. 419-421, 1977.

5. Garfield, E. "Dispelling a Few Common Myths About Journal Citation Impacts," The Scientist 11(3):11 (February 3, 1997).