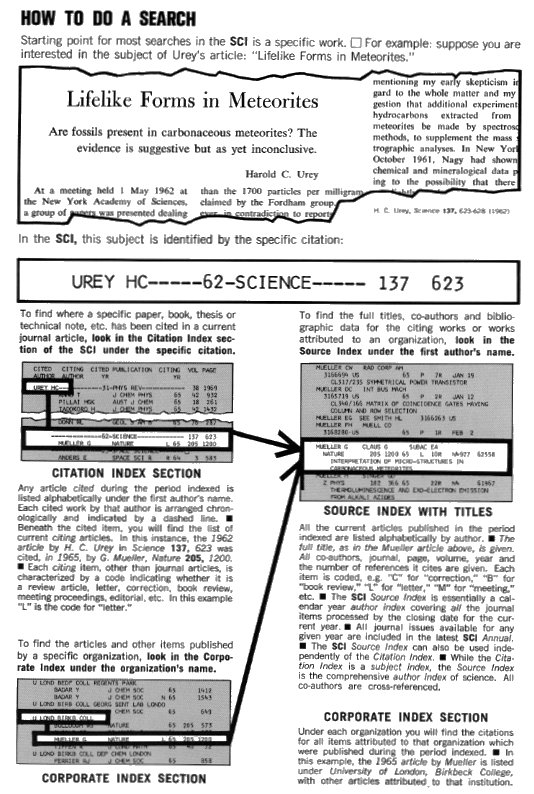

FIGURE 1: HOW TO DO A SEARCH -- HAROLD UREY EXAMPLE

Eugene Garfield

Chairman Emeritus, ISI®

Publisher, The Scientist®

3501 Market Street

Philadelphia, PA 19104

Tel. 215-243-2205

Fax 215-387-1266

email: garfield@codex.cis.upenn.edu

Home Page: http://garfield.library.upenn.edu

at the Consensus Conference on the Theory and Practice of Research Assessment

Capri, October 7, 1996

__________________________________

As Dr. Salvatore and Susan Cozzens are aware, when I was first approached to give this talk, its original manuscript had already been submitted for publication; it was submitted over a year ago and is now being evaluated at Nature. Its primary purpose was to provide a synoptic history of citation indexes for information retrieval, information dissemination, and writing the history of contemporary science. In that context, scientometrics and bibliometrics are treated simply as a by-product of Science Citation Index® production. As you know, there is now a substantial industry involving citation studies. Many of them are somewhat irrelevant for your purposes, e.g., those involving the selection and deselection of journals by research libraries, journal evaluation and ranking by editors and publishers, or tracing the lifetime impact of individual scholars. My presentation today essentially avoids discussion of research evaluation methods per se. This may seem strange, but such methodologies will be dealt with extensively by others at this conference.

However, at the outset I will refer to validating studies of citation analysis. These are epitomized by the recent, massive report published by the National Academy of Sciences titled Research-Doctorate Programs in the United States: Continuity and Change.1 The study used an extensive questionnaire addressed to most academic research institutions in the USA, correlated the tabulated results with citation and publication analyses, and concluded that:

"The clearest relationship between ratings of the 'scholarly quality of program faculty' and these productivity measures occurred with respect to 'citation' - with faculty in top-rated programs cited much more often than faculty in lower-rated programs who published."

Questionnaire surveys are but one of many different subjective approaches to research evaluation. Cornelius Le Pair has succinctly stated the approach to citation analysis that I have always supported: "Citation Analysis is a fair evaluation tool for those scientific sub-fields where publication in the serial literature is the main vehicle of communication."2

It's important to recognize the ambiguity of the term "research evaluation." Sometimes it refers to faculty evaluation, other times to the evaluation of graduate research programs. Granting agencies, in turn, evaluate research in particular areas of science. In all these studies, methodologies for the proper identification of specialties and sub-specialties (invisible colleges) are crucial. The work of Henry Small, Callon, Van Raan, and others on co-citation and co-word clustering is important to note. Any citation analysis for research evaluation must take advantage of such methods if it is to support informed decisions on funding or awards.

The idea of a citation index for science was the culmination of my investigation of the linguistic and indexing characteristics of scientific review articles and of a serendipitous encounter with Shepard's Citations. Both these inspirations resulted from interaction with established scholars. My initial interest, which soon became a preoccupation, was aroused by pharmacologist/historian Chauncey D. Leake.3,4 My introduction to the U.S. legal citation system came from W. C. Adair,5 a retired vice president of Shepard's Citations, who wrote to me in March 1953, toward the close of the Johns Hopkins Welch Medical Indexing Project, of which I was a member.

When the Project closed in June, I enrolled in the Columbia University School of Library Service. There, early in 1954, I wrote a term paper proposing the creation of citation indexes. After much revision and help from Johns Hopkins biologist Bentley Glass, it was published in Science6 in 1955. Its primary aim was to improve the retrieval of science information. That the putative Science Citation Index® (SCI®) should be unified, that is, multidisciplinary, with each journal indexed cover-to-cover, was reiterated in a paper I presented at the 1958 International Conference on Scientific Information.7

At that time, there was widespread dissatisfaction with the array of traditional discipline-oriented indexing and abstracting services. They were all inordinately late. Indexing was inconsistent and uncoordinated. Selection policies left major gaps in coverage.

The Impact Factor

Only a few lines of my 1955 Science paper referred to the "impact factor" of individual research papers. The idea of average citation frequencies, that is, journal impact factors, now so widely used for evaluation analyses, did not develop for more than a decade.8, 9 Ironically, in view of my stated objective, these impact measures have received much greater attention in the literature than the proposed use of citation indexes to retrieve information. This is undoubtedly due to the frequent use and misuse of citations for the evaluation of individual research performance, a field which suffers from inadequate tools for objective assessment. While there are countless legitimate applications of citation data, in the hands of uninformed users they carry a definite potential for abuse.

It is safe to say that journal impact factors, as reported each year since 1979 in the SCI and Social Sciences Citation Index® (SSCI®) Journal Citation Reports®, have been the most widely used derivative metric of citation analysis. They are extensively used by libraries for journal selection and weeding, and by faculty selection committees as part of the evaluation of individual performance.
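For readers unfamiliar with the arithmetic, here is a minimal Python sketch of the conventional two-year journal impact factor: citations received in year Y by items a journal published in Y-1 and Y-2, divided by the number of citable items it published in those two years. The function name, the dictionary inputs, and the sample figures are illustrative assumptions; this is not ISI's production code, and deciding which items count as "citable" is left to the caller.

```python
from __future__ import annotations


def impact_factor(cites_received: dict[int, int],
                  citable_items: dict[int, int],
                  year: int) -> float:
    """Two-year journal impact factor for `year` (illustrative sketch).

    cites_received: publication year -> citations received *during `year`*
                    by the journal's items published in that year.
    citable_items:  publication year -> number of citable items published.
    """
    window = (year - 1, year - 2)  # the two preceding publication years
    cites = sum(cites_received.get(y, 0) for y in window)
    items = sum(citable_items.get(y, 0) for y in window)
    if items == 0:
        raise ValueError("no citable items in the two-year window")
    return cites / items


# Hypothetical journal: 300 + 500 citations in 1995 to 100 + 100 items from 1994 and 1993.
print(impact_factor({1994: 300, 1993: 500}, {1994: 100, 1993: 100}, 1995))  # 4.0
```

Because it is an average, a handful of very highly cited items can dominate the result, which is one reason the journal figure says little about any individual paper in it.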

Validating Studies of Citation Analysis

There is a huge literature on citation analysis, but only a few studies could be called "validating," that is, studies that confirm its value in literature searching or evaluation research. This lack of extensive validating studies has not affected the pragmatic use of citation analysis for these purposes. Julie Virgo's 1977 study10 demonstrated a high correlation between citation analysis and peer judgment in identifying research-front leaders in cancer.

"The purpose of this study was to develop, using objective criteria, a statistical procedure to evaluate the importance of scientific journal articles. Two approaches were taken using articles from the field of medicine. The first tested the specific hypothesis that journal articles rated important by subject experts would be cited more frequently in the journal literature than would articles judged to be less important. The hypothesis was tested by determining the extent to which a measure based on citation frequency could predict the subject experts' opinion on the importance of papers presented to them in pairs (one pair member was an infrequently cited paper and the other a frequently cited paper). The experiment showed that citation frequency was able to consistently predict the more important paper.

"To determine which other factors were associated with articles judged important, a stepwise regression analysis was made. Although ten variables were considered, only two were significantly related to the differences between articles that had been rated on a scale of one to five of importance. While citation frequency had been a strong predictor of pair-wise judgments about the importance of articles, the regression equation performed even better in agreeing with judges' ratings.

"The design of this study called for judgments to be made on pairs of articles, one pair member being an infrequently cited paper and the other a frequently cited paper, since using extremes maximized the chance of detecting small effects.

"It is suggested that a potentially fruitful area for further research would be to obtain judge ratings on sets of articles coming from a variety of citation frequencies, not just extremes. Using the regression equation obtained in the present study, importance predictions about each of the articles could be made and compared with the subject experts' opinions about the articles. In addition, similar studies in other areas of science should be carried out to determine the applicability of the approach used in the present study, to subject areas other than that of medicine."

The same year, Henry Small performed a similar study for the field of collagen research.11 Through a longitudinal study of co-citation linkages, he identified the most important advances in collagen research over a five-year period. The results were validated by questionnaires. The survey "demonstrated that the clustered, highly-cited documents were significant in the eyes of the specialists, and that the authors of these papers were, by and large, the leading researchers identified as such by their peers."

In 1983 Michael Koenig published bibliometric analyses of pharmaceutical research.12, 13 The second of this series compared bibliometric indicators with expert opinions in assessing the research performance of 19 pharmaceutical companies. He concluded, inter alia, that expert judgments were highly predictable from the bibliometric measures, while the converse relationship did not hold.

But much larger validation studies, based on large populations across most academic disciplines, had already been conducted by the sociologist Warren Hagstrom.14 He used citation counts from the 1966 Science Citation Index, published just two years after the service was launched. In his analysis of department quality indicators in 125 university departments, publications and citations proved to be the two leading determinants.

These studies compared the results of questionnaire surveys of U.S. faculties with the results of citation analyses. Several subsequent NRC studies, including the most recent, highly publicized NAS report,1 have combined publication productivity and citation data with peer surveys.

During the past two decades, dozens of papers have been published that use bibliometric data to identify leaders in various specialties by measuring article productivity, citation impact, and most-cited papers. When such data are used in combination with peer judgments, the overall validity of these studies is rarely questioned. The most recent example is the work of Nicolini et al.15

Nicolini describes an evaluation of 76 candidates for university chairs in biophysics and related disciplines. He correctly points out that the evaluation of a person by scientometric methods is complex and needs more precautions than bibliometric analyses of countries, institutions, or groups. Since this work is so recent and known to so many of those present, I will not repeat his conclusion (p. 106) about the relevance of such studies, provided the proper normalization procedures are followed.

Comparative validation studies in information retrieval have also been limited.16, 17 Apart from retrieval and research evaluation, there have been hundreds of applications of citation data in studies designed to test various hypotheses or conjectures, or to identify key people, papers, journals, and institutions in various scientific and scholarly specialties. Not surprisingly, fields like economics and psychology, where quantitative measures of human behavior are the norm, have produced a large fraction of such studies. Various correction factors have been devised to reduce identified discrepancies between quantitative citation studies and human peer judgments. The need to account for age and other differences was anticipated by Norman Kaplan,18 Margolis,19 and others.

Information Retrieval

At its official launch in 1964, and for another decade,20,21 the utility of the Science Citation Index as a retrieval and dissemination device was hotly debated in library circles, but it is rarely questioned today. We do not know the precise extent of its current use for information retrieval, but we do know that it is frequently used in most major research libraries of the world, in print, on CD-ROM, or online. Nevertheless, it is a sobering commentary on the conservative nature of education in science, medicine, and the humanities that only a fraction of scientists, physicians, or scholars ever receive formal instruction in the use of citation indexes. With rare exceptions, researchers do not encounter the SCI, SSCI®, or Arts and Humanities Citation Index® (AH&CI®) until they enter graduate school. A few liberal arts institutions have incorporated such training into undergraduate instruction, but the work of Evan Farber at Earlham College is the exception rather than the rule.22 At some large research universities, such as Purdue or Michigan State University, where chemical literature searching has been taught for some time, use of the SCI is covered routinely, especially as a means of augmenting searches begun with other indexing services.


A key advantage of citation indexing from the outset was its capacity to bypass normal linguistic forms such as title words, keywords, or subject headings. In 1978, Henry Small described the symbolic role played by the citation in representing the content of papers.23 In combination with various natural language expressions, citation indexes greatly improve comprehensive literature searches. I have often described the SCI as a tool for navigating the literature,24 but the fundamental retrieval function of the citation index is to enable the searcher to locate the subsequent, and especially the current, descendants of particular papers or books. Each starting paper is used to symbolize a unique concept, from the simplest to the most complex of ideas or procedures. The Citation Index user frequently wants to focus initially on retrieving only those papers that have cited that primordial work. Once the citing papers are retrieved, the scope of the search can be expanded by using the papers they cite, or other related papers, as entry points to the Citation Index. These starting references can also be supplemented by using the Permuterm® Subject Index section of the SCI25 or other keyword indexes.

Citation Consciousness

In principle, all scholars and editors ought to be asking about any publication of interest to them, "Has it been cited elsewhere?" Such citation consciousness is especially important when reviewing what is cited in newly submitted manuscripts. The routine exercise of the cited reference search in the citation index would help prevent much unwitting duplication and the alleged widespread failure to cite relevant literature.26 John Martyn's classic 1963 study indicated a 25% rate of inadvertent duplication in research.27 In any event, routine checks of citation and other indexes would help reduce such duplication. The attention recently devoted to misconduct in science has given greater impetus to the notion that authors should explicitly declare that they have searched the literature.28 The issue of "When to Cite" is complex.20, 29
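The cited reference search that answers "Has it been cited elsewhere?", together with the expansion step described earlier under Information Retrieval, can be sketched in Python as follows. It assumes, purely for illustration, that each paper's reference list is available as a dictionary keyed by a hypothetical paper identifier; inverting those lists yields a cited-to-citing index, which is the essential structure of a citation index. The function names are hypothetical, and only a single round of expansion is shown.

```python
from __future__ import annotations

from collections import defaultdict


def build_citation_index(reference_lists: dict[str, list[str]]) -> dict[str, set[str]]:
    """Invert citing -> cited reference lists into a cited -> citing index."""
    index = defaultdict(set)
    for citing, cited_works in reference_lists.items():
        for cited in cited_works:
            index[cited].add(citing)
    return dict(index)


def cited_reference_search(index: dict[str, set[str]], start: str) -> set[str]:
    """'Has it been cited elsewhere?': every paper that cites `start`."""
    return set(index.get(start, ()))


def expand_search(index, reference_lists, start):
    """Use the references of each citing paper as new entry points (one round)."""
    found = cited_reference_search(index, start)
    expanded = set(found)
    for paper in found:
        for ref in reference_lists.get(paper, []):
            expanded |= cited_reference_search(index, ref)
    expanded.discard(start)
    return found, expanded


# Hypothetical reference lists: paper -> works it cites.
refs = {"A": ["X"], "B": ["X", "Y"], "C": ["Y"], "D": ["Z"]}
idx = build_citation_index(refs)
found, expanded = expand_search(idx, refs, "X")
# found holds A and B; expanded also holds C, which shares reference Y with B.
```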

A fundamental dilemma arises when routine searches of citation indexes are based on what is cited in new manuscripts. How will the search identify highly relevant references that are not known to the author or the referees and were not found by traditional keyword or KeyWords Plus30 searches? Experience tells us that relevant material is frequently missed. An idea can be expressed in many different ways that defy the normal search procedure. The advantage of citation indexing in overcoming these linguistic barriers has been extensively discussed and documented; Henry Small's review of the citation as symbol, mentioned above, is only one example.23

The answer to the dilemma is found in the natural redundancy of reference lists. Each new research manuscript, depending upon the field, normally contains from 15 to 35 references. What is the chance that a "missing" relevant reference will not be found by searching citation indexes to determine whether any of the papers cited by the author are cited elsewhere? Such procedures have sometimes been called "cycling."31
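A minimal sketch of cycling, under stated assumptions: the citation index is a Python dict mapping each cited work to the set of papers citing it, and candidate papers are ranked by how many of the manuscript's references they share, following the redundancy argument above. The function name `cycle`, the ranking rule, and the sample identifiers are illustrative, not a documented ISI procedure.

```python
from collections import Counter


def cycle(manuscript_refs, index, top=10):
    """Rank papers that cite any of the manuscript's references.

    index: cited work -> set of citing papers (a citation index).
    Papers sharing more of the manuscript's references rank higher.
    """
    cited_by_manuscript = set(manuscript_refs)
    shared = Counter()
    for ref in cited_by_manuscript:
        for citing in index.get(ref, ()):
            shared[citing] += 1
    for ref in cited_by_manuscript:
        shared.pop(ref, None)  # already in the manuscript's own bibliography
    return shared.most_common(top)


# Hypothetical index: P cites all three of the manuscript's references.
index = {"R1": {"P", "Q"}, "R2": {"P"}, "R3": {"P", "R1"}}
print(cycle(["R1", "R2", "R3"], index))  # [('P', 3), ('Q', 1)]
```

A paper such as P, citing every reference on the list yet absent from it, is exactly the kind of "missing" relevant work that a referee would want the author to explain.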

Routine Citation Checks in Refereeing

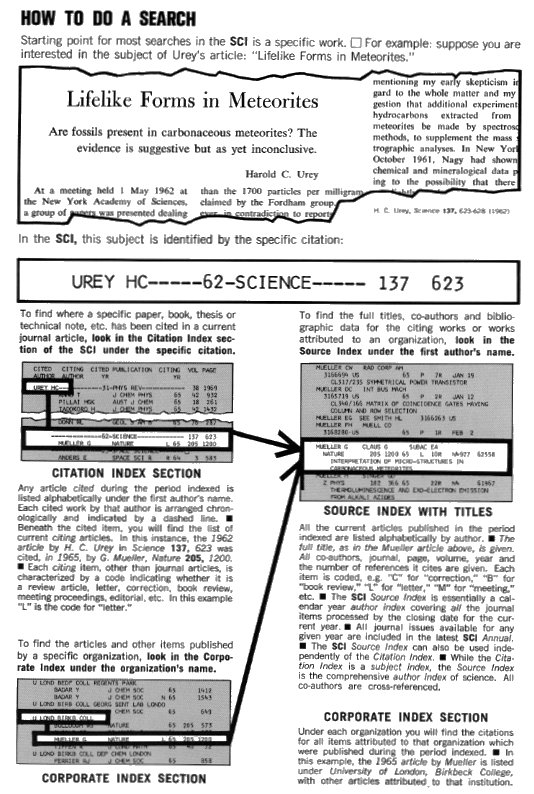

FIGURE 2: BASIC SEARCH TECHNIQUE WITH STARTING REFERENCE

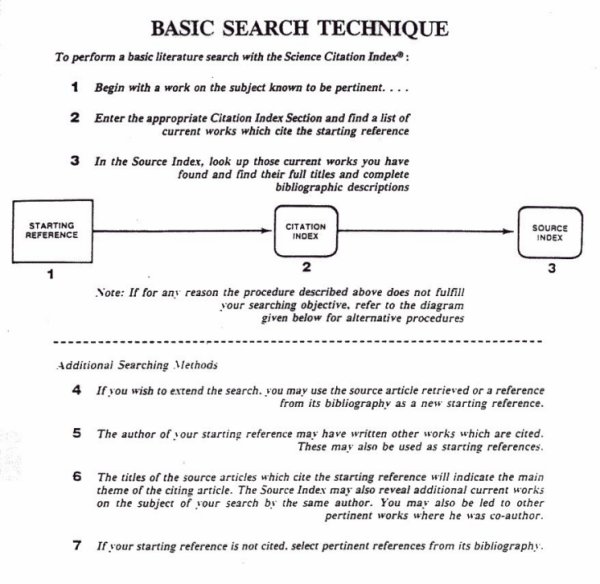

FIGURE 3: WITHOUT STARTING REFERENCE

FIGURE 4: SCI SEARCH FOR DUPLICATED RESEARCH

One of the earliest examples I used to demonstrate cycling20, 32 involved an apology published in Analytical Chemistry. The authors33 had not known about another paper28 that had anticipated their work. Upon examining the two papers in question, I observed that half of the references they cited had also been cited by the earlier authors. This co-citation pattern is quite common, indeed pervasive, in scholarship. Its prevalence enabled Henry Small and others to use co-citation as a reliable means of tracing and mapping specialty literatures.35 Referees and editors ought to be asking authors not only whether a traditional keyword search has been performed, but also whether the author's own bibliography has been subjected to citation searches.
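The overlap observed in the Analytical Chemistry case, with half of the later authors' references also cited by the earlier paper, is simple to quantify. The Python sketch below computes it for two hypothetical reference lists; in the literature, this kind of shared-reference measure between two citing papers is usually called bibliographic coupling. The identifiers and function name are made up for illustration.

```python
def shared_reference_fraction(later_refs, earlier_refs):
    """Fraction of the later paper's references also cited by the earlier paper."""
    later, earlier = set(later_refs), set(earlier_refs)
    if not later:
        raise ValueError("later paper has no references")
    return len(later & earlier) / len(later)


# Hypothetical reference lists: 3 of the later paper's 6 references are shared.
later = ["r1", "r2", "r3", "r4", "r5", "r6"]
earlier = ["r1", "r3", "r5", "r7"]
print(shared_reference_fraction(later, earlier))  # 0.5
```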

Current Awareness

FIGURE 5: RESEARCH ALERT EXAMPLE

Apart from its widespread use for information retrieval and in evaluation research, citation indexing has two other uses that deserve special mention. The first is the selective dissemination of information. Over 25 years ago, Irving Sher and I published the first papers on ASCA (Automatic Subject Citation Alert),36, 37 now called Research Alert®. This system of selective dissemination of information (SDI) involves matching the combined reference, keyword, and author profile of each new paper against each user's search profile of references, terms, and authors. The system enables thousands of searchers to be alerted to new papers that cite any of the references, or contain any of the terms or authors, in their personal profiles, including their own or related authors' work. It is hard to understand why this type of scientific clipping service is not more widely used. However, variants of SDI profiling have been adopted in many online search systems, mainly using keywords, descriptors, or subject headings, as in Medline. The comparable SCI-based system is called SciSearch® and is available on several systems, including Dialog and STN.
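The profile matching behind an SDI service such as ASCA can be sketched as set intersection between each new paper's references, keywords, and authors and a user's profile. This Python sketch assumes, as the text says, that any single hit triggers an alert; ASCA's actual profile syntax, weighting, and delivery schedule are not described here, and the class and function names and the sample papers are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Paper:
    title: str
    authors: set[str]
    keywords: set[str]
    references: set[str]


@dataclass
class Profile:
    authors: set[str] = field(default_factory=set)
    terms: set[str] = field(default_factory=set)
    references: set[str] = field(default_factory=set)


def match(paper: Paper, profile: Profile) -> dict[str, set[str]]:
    """Return the profile elements a new paper hits; an empty dict means no alert."""
    hits = {
        "references": paper.references & profile.references,
        "terms": paper.keywords & profile.terms,
        "authors": paper.authors & profile.authors,
    }
    return {kind: items for kind, items in hits.items() if items}


def run_alert(new_papers: list[Paper], profile: Profile) -> list[tuple[str, dict]]:
    """Any single hit is enough to alert the user."""
    alerts = []
    for paper in new_papers:
        hits = match(paper, profile)
        if hits:
            alerts.append((paper.title, hits))
    return alerts


# Hypothetical profile and a batch of new papers.
me = Profile(references={"Garfield 1955 Science"}, terms={"co-citation"})
batch = [
    Paper("Paper 1", {"Doe J"}, {"co-citation", "mapping"}, {"Small 1973"}),
    Paper("Paper 2", {"Roe R"}, {"enzymes"}, {"Garfield 1955 Science"}),
    Paper("Paper 3", {"Poe P"}, {"optics"}, {"Maxwell 1865"}),
]
for title, hits in run_alert(batch, me):
    print(title, hits)  # alerts on Paper 1 (term hit) and Paper 2 (reference hit)
```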

History of Contemporary Science

A second use of citation indexing is in writing the history of contemporary science. The Science Citation Index® source material now covers fifty years of the literature, from 1945 to the present, and thus provides a major tool for the contemporary history of science. About 25 million papers have been published since the end of World War II, containing at least 250 million cited references. In spite of the huge number of these reference links, the complete citation network can be stored in about 20 gigabytes of computer memory. Such a complete file is not yet available electronically but could be created from ISI's master tapes. The SCI print edition covers 1945 to the present, while 1980 to the present is also available on CD-ROM. The online version, SciSearch, is available on Dialog and STN from 1974 onward. The Social Sciences Citation Index® (SSCI®) is available from 1955 onward in similar print, CD-ROM, and online editions. The Arts and Humanities Citation Index® begins with the 1975 literature.
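Taking the round figures above at face value, and assuming decimal gigabytes, the storage estimate implies roughly 80 bytes per citation link and about ten references per paper on average. Because 250 million is stated as a lower bound, 80 bytes is an upper bound on the per-link budget:

$$
\frac{20 \times 10^{9}\ \text{bytes}}{250 \times 10^{6}\ \text{links}} = 80\ \text{bytes per link},
\qquad
\frac{250 \times 10^{6}\ \text{references}}{25 \times 10^{6}\ \text{papers}} = 10\ \text{references per paper}.
$$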

Using the full SCI/SSCI/AH&CI® files, one can trace an uninterrupted path covering fifty years for almost any designated paper. Nevertheless, there is little evidence that scholars conduct such searches. I myself have regularly used a derivative of this huge file to identify putative Citation Classics. This internal ISI file can be used to find the SCI/SSCI papers most cited during 1945-92, above a specified citation threshold. The file is sorted by author or journal.
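Selecting candidate Citation Classics from such a file amounts to a threshold filter followed by a sort. In this Python sketch, the record fields, the threshold, and the sample entries are hypothetical; the threshold ISI actually used is not stated here.

```python
from operator import attrgetter
from typing import NamedTuple


class CitedItem(NamedTuple):
    first_author: str
    journal: str
    year: int
    citations: int  # cumulative citations over the file's period (e.g. 1945-92)


# Sort orders mirroring the two arrangements of the file: by author or by journal.
SORT_KEYS = {
    "author": attrgetter("first_author", "journal", "year"),
    "journal": attrgetter("journal", "first_author", "year"),
}


def candidate_classics(items, threshold, sort_by="author"):
    """Return items cited at least `threshold` times, sorted by author or journal."""
    if sort_by not in SORT_KEYS:
        raise ValueError(f"sort_by must be one of {sorted(SORT_KEYS)}")
    selected = (item for item in items if item.citations >= threshold)
    return sorted(selected, key=SORT_KEYS[sort_by])


# Hypothetical records and threshold, for illustration only.
records = [
    CitedItem("Smith A", "J Biol Chem", 1951, 1200),
    CitedItem("Jones B", "Nature", 1971, 450),
    CitedItem("Adams C", "Science", 1965, 90),
]
for item in candidate_classics(records, threshold=400):
    print(item.first_author, item.journal, item.citations)
```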

Citation Classics®

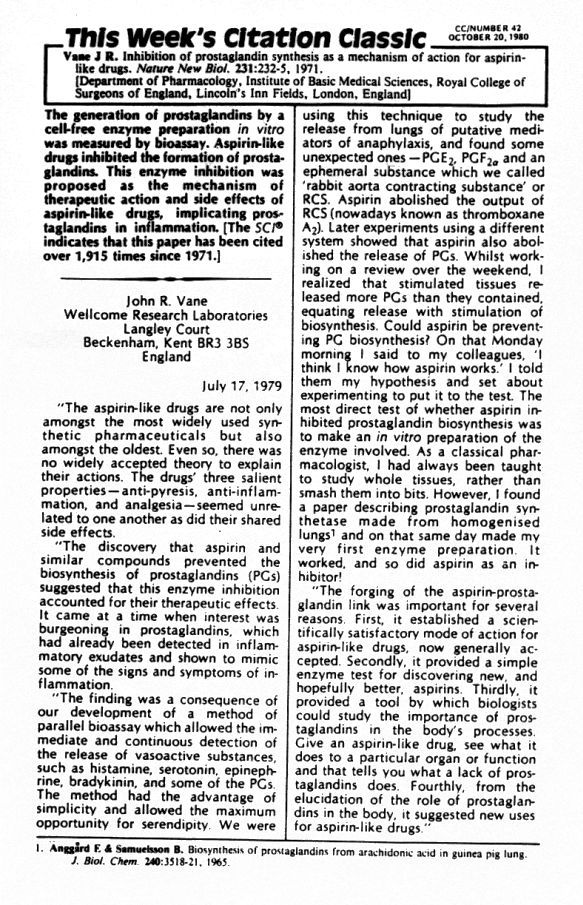

FIGURE 7: VANE CITATION CLASSIC

Over a fifteen-year period, authors of about 10,000 candidate Citation Classics were asked to comment on their highly cited papers or books.38 About 50% responded, and their responses were published in Current Contents®; about 2,000 of these commentaries were reprinted in 1984 in a seven-volume series titled Contemporary Classics in Science®.39 Campanario recently used a "sample" of these "most-cited" papers to examine, e.g., patterns of initial rejection by leading journals of papers later published elsewhere.40 A major study of women in science also relied on this database.41 It has been somewhat disappointing to see how few journal editors have chosen to use these journal files to identify their landmark papers.42, 43

Perhaps this reluctance by editors is connected to a more general hostility toward and distrust of quantitative methods in peer review. The point of examining lists of most-cited papers is not to claim dogmatically that they are the "best," but rather to make certain that various evaluation processes do not overlook high-impact work that otherwise might be ignored by members of awards or other evaluation committees. It is equally important for such groups to determine why certain papers deemed to be seminal to a field have not been cited at levels one might have expected. Was this due to obliteration by incorporation44, 45 or to other factors in the history of these relatively uncited landmark papers?

Emergence of Scientometrics

A new sub-specialty known as "scientometrics" has developed because of the availability of the ISI citation indexes. Derek de Solla Price was one of the first to recognize the potential of citation analysis for science-policy related studies, and thus helped found scientometrics. His mentor, J.D. Bernal, referred to such studies as the science of science. Countless scientometric analyses have been published as separate papers while many others are simply incorporated into papers that support scientific, scholarly or policy arguments of one kind or another.

Much has been made of the applicability of citation data, but applicability varies with the level of aggregation chosen. The national and regional indicators reported by the NSF over a twenty-year period have used SCI data to identify trends of one kind or another.46 Such statistical reports by country or discipline also appear regularly in publications like Science Watch® or Scientometrics. Citation analysis becomes controversial mainly when it is used as a tool in making decisions about funding or the tenure of individuals or groups, especially when it is perceived to be an uninformed use of citation data. Many such analyses are unpublished and, like most unrefereed work, may in fact involve the abuse of SCI data and rightly evoke hostility or unease. After all, some highly published authors are little more than bureaucrats who attach their names to every paper they can. Unless such details are known to the evaluators, citation data could be used to perpetuate an unjust distribution of resources. Various forms of inappropriate authorship appear in a recent discussion of scientific misconduct.47

But the opposite may also be true. In several countries where research funding is often highly political, many of the most deserving researchers receive only a small fraction of research funds, in contrast to parasites who have not published a paper in a decade or more. Many well-funded clinical researchers publish in obscure national journals in the local language to hide their lack of international significance. In contrast, younger researchers not only publish in international journals but are also well cited. Their impact on their scientific fields becomes clearly visible through citation analysis.

Old Boy Network

In science, as in other areas of life, "awards" and elections to academies are usually made by committees, sometimes described as "old boy networks." Unless they are regularly refreshed with new members, they tend to rely on biased human memory in making their selections. Such fossilized groups rarely ask for outside nominations or subject their choices to informed confirmation. The routine use of citation analysis in the award process can ameliorate such situations and should include consideration of appropriate cohort candidates for the innumerable awards in science. It should also uncover those individuals who have been inadvertently or otherwise overlooked in the nomination process. And in some cases, where certain pioneers are selected late for awards, the citation history should demonstrate that their basic work was premature,48 that is, that many years elapsed before it was widely recognized.

It is often asserted that citation analysis does not adequately recognize small fields. It is rare indeed for a Nobel Prize or a Lasker, Wolf, or Gairdner award to be awarded in fields so small that the citation impact of the candidates is not above average or otherwise recognizable. To avoid injustice to smaller fields, the SCI files should be sub-divided or categorized, as was done, e.g., in radio astronomy.49, 50 Papers and authors with above-average impact will then stand out from others in the cohort. Arno Penzias was not necessarily among the 1,000 most-cited scientists, but he was among the most-cited radio astronomers.
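One common way to make the comparison field-relative is to divide an author's mean citations per paper by the mean for the field's papers, so that a radio astronomer can stand out within radio astronomy without appearing among the most-cited scientists overall. This Python sketch assumes field assignments are already made (for example, from a sub-divided file); the names and citation counts are hypothetical, and this is not necessarily the normalization used in the studies cited.

```python
from statistics import mean


def relative_impact(author_cites, field_cites):
    """Author's mean citations per paper divided by the mean for the field's papers."""
    baseline = mean(field_cites)
    if baseline == 0:
        raise ValueError("field has no citations to normalize against")
    return mean(author_cites) / baseline


def stand_outs(cohort, field_cites, min_ratio=1.0):
    """Authors whose field-normalized impact exceeds min_ratio, highest first."""
    scores = {name: relative_impact(cites, field_cites) for name, cites in cohort.items()}
    above = [(name, score) for name, score in scores.items() if score > min_ratio]
    return sorted(above, key=lambda pair: pair[1], reverse=True)


# Hypothetical small field: its papers average 5 citations each.
field = [2, 4, 6, 8]
cohort = {"Astronomer X": [10, 20], "Astronomer Y": [3, 5]}
print(stand_outs(cohort, field))  # [('Astronomer X', 3.0)]
```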

Identifying Research Fronts

To use citation data properly, one needs a procedure for identifying fields, especially small fields. From the earliest days of our work in indexing and classification, it was recognized that the process of field identification is highly problematic. With the pioneering 1973 work of Small35 and Marshakova,51 we entered the era of algorithmic classification. The use of co-citation analysis for the identification of research fronts made it possible to identify small and large fields systematically. While a research front can emerge from a single seminal work, normally two or more core papers are involved in the identification of new fronts.34
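The core idea of co-citation clustering can be sketched in a few lines: count how often two works are cited together, keep pairs whose count meets a threshold, and group linked works into clusters. This Python sketch uses raw counts and single-link clustering (connected components) as simplifying assumptions; ISI's production procedure is not reproduced here (for instance, any prior selection of highly cited core papers or normalization of co-citation strength). The identifiers and threshold are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Count how often each pair of cited works appears together in a reference list."""
    counts = Counter()
    for refs in reference_lists:
        for pair in combinations(sorted(set(refs)), 2):
            counts[pair] += 1
    return counts


def single_link_clusters(counts, threshold):
    """Connected components of the graph whose edges are pairs co-cited >= threshold times."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for (a, b), n in counts.items():
        if n >= threshold:
            parent[find(a)] = find(b)  # merge the two clusters

    clusters = {}
    for node in list(parent):
        clusters.setdefault(find(node), set()).add(node)
    return sorted(clusters.values(), key=sorted)


# Hypothetical reference lists of six citing papers.
papers = [["A", "B", "C"], ["A", "B"], ["A", "B"], ["D", "E"], ["D", "E"], ["C", "F"]]
print(single_link_clusters(cocitation_counts(papers), threshold=2))
# two clusters: A with B, and D with E; C and F fall below the threshold
```

Lowering the threshold merges clusters (at a threshold of 1, C and F join A and B), which is why the choice of threshold largely determines how finely fields are resolved.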

FIGURE 8: CO-CITATION OF CORE PAPERS FOR "PROSTAGLANDINS AND INFLAMMATION."

While co-citation analysis has been used systematically by ISI to identify thousands of research fronts en masse each year, a similar procedure called co-citation mapping can also be used to create ad hoc cluster maps. In short, one can construct the map of core papers for any specialty by establishing the citation linkages among groups of papers associated with any individual or group under consideration. Indeed, it might be argued that unless one has actually or implicitly created the map of an individual's specialty, one cannot say with assurance whose role was primordial. Such maps can be created to cover short or long periods. It would be an anomalous situation if a deserving scholar's work did not turn up as one of the key nodes on the map of his or her field. Such key links need not, however, be based on high citation frequency. As Sher, Torpie, and I demonstrated over 30 years ago, careful citation mapping leads to the uncovering of small but important historical links overlooked by even the most diligent scholars.52

Henry Small's SciMap software is now routinely used to create these maps for small databases extracted from the ISI indicators files for 1981-95.

FIGURE 9: SCIMAP OF PROSTAGLANDIN SYNTHASE

Uncitedness

A frequent topic, given prominence in 1990 by a reporter for Science,53 is that of uncitedness, that is, the failure of publications to be cited at all, or more than rarely. The truth is that we know too little about uncitedness. Hamilton53 garbled some unpublished data he was given without recognizing the details that remained to be worked out. He used these data to support his preconceived notions about the alleged lack of utility of large areas of scholarly research. Pendlebury published a note in Science which attempted to correct the false impression created,54 but like so many other published errors, Hamilton's report continues to be cited while Pendlebury's "correction" is mainly overlooked.

Regardless of what is done about the selective funding of research, there will always be a skewed distribution of citation frequency. The vast majority of published papers will always remain infrequently cited. These Pareto- or Lotka-type distributions of citation "wealth" are inherent in the communication process so well described by Derek Price.55 As a result, there are inevitable discontinuities in scholarship. A small percentage of the large mass of poorly cited material may include some work that can be described as "premature" in the sense of being valid and important but not recognized.48 It should be possible to systematically reexamine such uncited works even decades later. Presumably some have served as useful stepping stones in the evolution of a particular field. Editors can easily identify such papers and reconsider them in light of changes in the field. While it would be a daunting task to re-evaluate all previously published manuscripts for this purpose, serious thought should be given to this task. What would happen to these papers were they resubmitted in light of the changes in the past decade or two?

More than likely, many uncited papers involve supersedure. Publication of research is a cumulative process. Each new laboratory report by established investigators builds on and/or supersedes their own earlier work. As the work progresses, it is not necessary to cite all the earlier reports. It is not unusual to observe that after a decade of research, the entire corpus is superseded by a "review" which is preferentially cited by subsequent investigators.

Hamilton also ignored the more prominent fact about uncitedness: among the leading research journals of the world it is, to all intents and purposes, nonexistent. The following tables provide data on cumulative citation counts for the average paper in each of the most influential scientific journals.

FIGURE 10: CUMULATIVE IMPACT OF 1981 ARTICLES AND UNCITEDNESS

| Journal | # Items 1981 | Av. Cum. Cites 1981-93 (All) | Av. Cum. Cites 1981-93 (Cited) | % Items Uncited | Total Cites 1981-93 |
|---|---:|---:|---:|---:|---:|
| Cell | 393 | 126 | 126 | 0 | 49,307 |
| NEJM | 378 | 116 | 117 | 1.06 | 43,784 |
| J Exp Med | 343 | 89 | 90 | 0.29 | 30,630 |
| PNAS (Biol) | 1550 | 86 | 86 | 0.13 | 133,135 |
| J Cell Biol | 367 | 81 | 81 | 0 | 29,629 |
| Arch Gen Psych | 152 | 79 | 80 | 1.31 | 11,970 |
| J Clin Invest | 418 | 77 | 78 | 0.48 | 32,226 |
| Nature | 1375 | 71 | 73 | 2.76 | 96,881 |
| J Neurosci | 106 | 70 | 70 | 0 | 7,432 |
| Science | 1077 | 61 | 64 | 4.36 | 65,831 |
| J Mol Biol | 307 | 61 | 61 | 0.99 | 18,629 |
| J Immunol | 989 | 55 | 55 | 0.2 | 54,380 |
| Circulation | 416 | 54 | 55 | 1.92 | 22,601 |
| Circulation Res | 267 | 54 | 54 | 0 | 14,439 |
| Ann Int Med | 290 | 54 | 55 | 2.1 | 15,528 |
| Blood | 360 | 53 | 53 | 0 | 18,983 |
| Lancet | 641 (a) | 52 | 69 | 25.6 | 33,056 |
| JBC | 2220 | 49 | 49 | 0.59 | 108,107 |
| Gastroenterology | 325 | 47 | 48 | 1.23 | 15,408 |
| Mol Cell Biol | 122 | 47 | 47 | 0.81 | 5,713 |
| Phys Rev L | 992 | 43 | 43 | 0.4 | 42,463 |
| Syst Zool | 34 | 43 | 44 | 2.86 | 1,455 |
| Am J Pathol | 167 | 42 | 43 | 1.19 | 7,053 |
| Eur J Immunol | 171 | 42 | 43 | 2.33 | 7,156 |
| Cancer Res | 851 | 37 | 37 | 0.59 | 31,245 |
| Ann Neurol | 222 | 37 | 38 | 2.69 | 8,131 |
| Lab Invest | 139 | 36 | 36 | 0.71 | 4,952 |
| J Virology | 483 | 32 | 33 | 0.83 | 15,558 |
| J Natl Canc I | 306 | 31 | 31 | 0.65 | 9,375 |
| Arthritis Rheum | 204 | 27 | 28 | 2.44 | 5,577 |
| Am J Hum Genet | 78 | 24 | 25 | 1.27 | 1,896 |
| Angew Chem | 413 | 21 | 22 | 2.66 | 8,664 |
| JAMA | 551 | 21 | 23 | 9.2 | 11,382 |

(a) Includes "Notes".
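The averages in Figure 10 follow from the counts by simple division. The sketch below recomputes them for four of the journals, assuming that the "(All)" column is total citations divided by all 1981 items and the "(Cited)" column is total citations divided by the cited items only. Because the table publishes only the uncited percentage, the number of uncited items is an estimate obtained by rounding. The `JournalRow` class and `averages` function are hypothetical names used for illustration.

```python
from dataclasses import dataclass


@dataclass
class JournalRow:
    """One row of Figure 10 (hypothetical container; values copied from the table)."""
    name: str
    items: int          # 1981 source items
    total_cites: int    # cumulative citations received 1981-93
    pct_uncited: float  # percentage of 1981 items never cited 1981-93


def averages(row: JournalRow) -> tuple[float, float, int]:
    """Return (cites per item, cites per cited item, estimated uncited items)."""
    avg_all = row.total_cites / row.items
    # Only the percentage is published, so the uncited count is an estimate.
    uncited = round(row.items * row.pct_uncited / 100)
    avg_cited = row.total_cites / (row.items - uncited)
    return avg_all, avg_cited, uncited


rows = [
    JournalRow("NEJM", 378, 43_784, 1.06),
    JournalRow("Science", 1077, 65_831, 4.36),
    JournalRow("Lancet", 641, 33_056, 25.6),
    JournalRow("JAMA", 551, 11_382, 9.2),
]

for r in rows:
    avg_all, avg_cited, uncited = averages(r)
    print(f"{r.name:8} all={avg_all:.1f} cited={avg_cited:.1f} uncited~{uncited}")
```

Rounded to whole numbers, the two computed averages agree with the table's entries for these four journals; the gap between the two columns is largest for Lancet, whose item count includes "Notes".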

For each journal we have determined the percentage of uncited papers. As we see, with the exceptions of Lancet and JAMA, more than 95% of the papers in each of these journals were cited. The cumulative citation frequency for these journals is indeed startling. As one would expect, the percentage of uncitedness increases as one reaches down into the many smaller, lower-impact journals.

Another important source of low-frequency citation is obliteration by incorporation (OBI) into review articles.44,45 There are hundreds of review journals which incorporate into their coverage thousands of stepping-stone papers that form the building blocks of scientific knowledge. Consider, e.g., that the Annual Review of Biochemistry published approximately 250 papers over the decade 1981-90. These reviews contained about 40,000 cited references, but, more importantly, each review has itself been cited, on average, in over 300 subsequent papers. This is detailed in Figure 11.

FIGURE 11: CUMULATIVE IMPACTS FOR ANNUAL REVIEW OF BIOCHEMISTRY


FIGURE 12: CUMULATIVE IMPACT 1981-94 FOR REVIEW JOURNAL ARTICLES PUBLISHED 1981-6

| Journal | 6-Year Average | Total Citations | Total Source Items |
|---|---:|---:|---:|
| ANN R BIOCH | 320.31 | 60219 | 188 |
| ANN R CELL | 231.35 | 7866 | 34 |
| ADV PROTEIN | 205.29 | 4311 | 21 |
| ANN R IMMUN | 194.59 | 16929 | 87 |
| PHYSIOL REV | 176.53 | 22066 | 125 |
| REV M PHYS | 167.85 | 21821 | 130 |
| ADV IMMUNOL | 159.19 | 6686 | 42 |
| MICROBIOL R | 145.28 | 18015 | 124 |
| ANN R NEUR | 141.60 | 13877 | 98 |
| PHARM REV | 138.26 | 10646 | 77 |
| ANN R PLANT | 138.22 | 17139 | 124 |
| ANN R GENET | 131.99 | 13199 | 100 |
| ENDOCR REV | 128.49 | 17731 | 138 |
| ADV PHYSICS | 108.93 | 6318 | 58 |
| ANN R PHARM | 107.59 | 15493 | 144 |
| ADV CARB C | 96.80 | 3388 | 35 |
| ANN R ASTRO | 94.43 | 8782 | 93 |
| ADV ORGMET | 90.62 | 3534 | 39 |
| CHEM REV | 90.54 | 13853 | 153 |
| BRAIN RES R | 89.74 | 9602 | 107 |
| ANN R PH CH | 85.64 | 10277 | 120 |
| IMMUNOL REV | 80.12 | 22753 | 284 |
| Q REV BIOPH | 77.37 | 3946 | 51 |
| REV PHYS B | 76.94 | 3847 | 50 |
| ANN R PHYSL | 75.35 | 20722 | 275 |
| PROG NUCL | 74.16 | 2373 | 32 |
| PSYCHOL REV | 73.71 | 11646 | 158 |
| CRC C R BI | 72.47 | 7754 | 107 |
| ANN R ECOL | 70.42 | 8239 | 117 |
| REC PROG H | 70.05 | 4273 | 61 |
| ANN R MICRO | 68.73 | 9829 | 143 |
| ACC CHEM RE | 68.44 | 23887 | 349 |
| ANN R BIOPH | 65.31 | 7184 | 110 |
| ADV ENZYM | 56.98 | 2963 | 52 |
| EPIDEMIOL R | 53.32 | 3039 | 57 |
| PHYS REPORT | 52.06 | 24259 | 466 |
| PROG NEUROB | 50.34 | 5789 | 115 |
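In Figure 12 the 6-year average is simply total citations divided by total source items (for example, 60219 / 188 = 320.31 for the Annual Review of Biochemistry). The short sketch below uses five rows copied from the table to show that ranking by this average, rather than by total citations, changes the order considerably. The `average_citations` helper is a hypothetical name used for illustration only.

```python
# Five rows copied from Figure 12: (journal, total citations, source items).
rows = [
    ("ANN R BIOCH", 60219, 188),
    ("ANN R CELL", 7866, 34),
    ("IMMUNOL REV", 22753, 284),
    ("ACC CHEM RE", 23887, 349),
    ("PHYS REPORT", 24259, 466),
]


def average_citations(total: int, items: int) -> float:
    """Citations per source item, rounded to two decimals as in the table."""
    return round(total / items, 2)


# Rank by the per-item average (as the table does) and by raw totals.
by_average = sorted(rows, key=lambda r: average_citations(r[1], r[2]), reverse=True)
by_total = sorted(rows, key=lambda r: r[1], reverse=True)

print("By average:", [(j, average_citations(t, n)) for j, t, n in by_average])
print("By total:  ", [j for j, _, _ in by_total])
```

Among these five, Physics Reports has the second-highest citation total but the lowest average, because its citations are spread over 466 source items.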

After the 1979 publication of my book on Citation Indexing,31 an excellent review of the literature was published in 1981 by Linda Smith.56 Then in 1984 Blaise Cronin published The Citation Process, a perceptive account of citation behavior and related issues.57 Subsequently, White and McCain58 and Cozzens59 provided additional reviews.

Conclusion

It is commonplace to speak about the isolation of the cultures of science and social science. Most practicing scientists seem completely oblivious to the large literature of citation and bibliometric studies. This synoptic review has only touched on the highlights.

From the perspective of the social scientist or humanities scholar, the failure to include monographs as sources in the ISI citation indexes may be a drawback in drawing conclusions about the impact of certain work.60 Nevertheless, the inclusion of books as cited references in ISI's citation indexes has permitted studies of most-cited books to be accepted as reasonable surrogates for more comprehensive studies that might have included books as sources. Undoubtedly, the creation of a Book Citation Index is a major challenge for the future and would be an expected by-product of the new electronic media with hypertext capability!

Future citation index databases will include the all-author feature so frequently missed by those who use the present generation of citation indexes. This capability is already built into the ISI Research Indicators database for 1981-95. Back in 1963 Michael Kessler introduced the notion of bibliographic coupling,61 that is, the retrieval of related papers by examining the cited references they share. This capability awaited the implementation of large computer memories. It is now available in the form of "related records" in the SCI CD-ROM editions. This feature enables researchers to navigate (hypersearch) from one document to another in real time. Combined with on-line access to the full texts of papers, expected in the near future, the navigational and retrieval capabilities of citation links will finally come into full bloom. Citation analysts will then have citations in context at their disposal, so that quantitative data can be augmented in real time with qualitative statements about the works being cited.
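Bibliographic coupling, as described above, measures how closely two papers are related by the number of cited references they share. The minimal sketch below assumes each paper is represented by a set of reference identifiers; the paper and reference IDs, and the `related_records` function name, are hypothetical. It illustrates the idea only and is not a description of how the SCI CD-ROM "related records" feature is actually implemented.

```python
def coupling_strength(refs_a: set[str], refs_b: set[str]) -> int:
    """Number of cited references two papers share (the coupling count)."""
    return len(refs_a & refs_b)


def related_records(query: str, refs_by_paper: dict[str, set[str]],
                    min_shared: int = 1) -> list[tuple[str, int]]:
    """Papers sharing at least `min_shared` references with `query`, strongest first."""
    query_refs = refs_by_paper[query]
    scored = [
        (paper, coupling_strength(query_refs, refs))
        for paper, refs in refs_by_paper.items()
        if paper != query
    ]
    # Sort by descending shared-reference count, then by paper ID for a stable order.
    return sorted(
        [(paper, shared) for paper, shared in scored if shared >= min_shared],
        key=lambda item: (-item[1], item[0]),
    )


# Hypothetical papers and the references each one cites.
refs_by_paper = {
    "P1": {"R1", "R2", "R3", "R4"},
    "P2": {"R2", "R3", "R4", "R9"},
    "P3": {"R1", "R7"},
    "P4": {"R8", "R9"},
}

print(related_records("P1", refs_by_paper))
```

Here P2 shares three references with P1 and P3 shares one, while P4 shares none and is omitted; raising `min_shared` would filter out weakly coupled papers such as P3.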

Let me conclude by quoting a recent paper by Jeff Biddle at Michigan State University:

"Citation analysis is one potentially useful tool for the researcher interested in the history of twentieth-century economics and will be most useful when used in conjunction with the more traditional methods relied upon by historians.... [citation analysis can be compared to] playing the role of a fallible witness. The historian who relies only on the testimony of the citation record is risking serious error, but the historian who fails to make use of it may be bypassing a valuable source of information."

Bibliography

2. Le Pair, C. "Formal Evaluation Methods: Their Utility and Limitations," International Forum on Information and Documentation 20(2):16-24 (October, 1995).

3. Garfield, E. "Calling Attention to Chauncey D. Leake -- Renaissance Scholar Extraordinary," Current Contents, April 22, 1970, reprinted in Essays of an Information Scientist, Volume 1, pages 102 (1977).

4. Garfield, E. "To Remember Chauncey D. Leake," Current Contents, February 13, 1978, pages 411-421 (1980).

5. Adair, W.C. "Citation Indexes for Scientific Literature?" American Documentation 6:31-32 (1955).

6. Garfield, E. "Citation Indexes for Science: A New Dimension in Documentation Through Association of Ideas," Science 122:108-111 (1955).

7. Garfield, E. "A Unified Index to Science," Proc. Intl. Conf. Sci. Info. 1958, Volume 1, 461-74 (1959).

8. Garfield, E. "Citation Analysis as a Tool in Journal Evaluation," Science 178:471-79 (1972).

9. Garfield, E. "Significant Journals of Science," Nature 264:609-15 (1976).

10. Virgo, J. "A Statistical Procedure for Evaluating the Importance of Scientific Papers," Library Quarterly 47:415-30 (1977).

11. Small, H. "A Co-Citation Model of a Scientific Specialty: A Longitudinal Study of Collagen Research," Social Studies of Science 7:139-166 (1977).

12. Koenig, M. E. D. "A Bibliometric Analysis of Pharmaceutical Research," Research Policy 12(1):15-36 (1983).

13. Koenig, M. E. "Bibliometric Indicators Versus Expert Opinion in Assessing Research Performance," Journal of the American Society for Information Science 34(2):136-145 (1983).

14. Hagstrom, W. O. "Inputs, Outputs and the Prestige of American University Science Departments," Sociology of Education 44:375-9 (1971).

15. Nicolini, C., Vakula, S., Italo Balla, M., & Gandini, E. "Can the Assignment of University Chairs be Automated?" Scientometrics 32(2):93-107 (1995).

16. Spencer, C. C. "Subject Searching the Science Citation Index. Preparation of a Drug Bibliography using Chemical Abstracts, Index Medicus, and Science Citation Index," American Documentation 18:87-96 (1967).

17. Pao, M. L. "Term and Citation Retrieval: A Field Study," Information Processing and Management 29(1):95-112 (1993).

18. Kaplan, N. "The Norms of Citation Behavior: Prolegomena to the Footnote," American Documentation 16:179-84 (1965).

19. Margolis, J. "Citation Indexing and Evaluation of Scientific Papers," Science 155:123-9 (1967).

20. Garfield, E. "Citation Indexes for Science," Science 144:649-54 (1964).

21. Steinbach, H.B. "The Quest for Certainty: Science Citation Index," Science 145:142-3 (1964).

22. Farber, E. Personal Communication.

23. Small, H. G. "Cited Documents as Concept Symbols," Social Studies of Science 8:327-40 (1978).

24. Garfield, E. "How to Use Science Citation Index (SCI)," Current Contents, February 28, 1983, reprinted in Essays of an Information Scientist, Volume 6, pgs 53-62 (1984).

Garfield, E. "Announcing the SCI Compact Disc Edition: CD-ROM Gigabyte Storage Technology, Novel Software, and Bibliographic Coupling Make Desktop Research and Discovery a Reality," Current Contents, May 30, 1988, reprinted in Essays of an Information Scientist, Volume 11, pgs 160-170 (1990).

25. Garfield, E. "The Permuterm Subject Index: An Autobiographical Review," Journal of the American Society for Information Science 27:288-91 (1976).

26. Garfield, E. "New Tools for Navigating the Materials Science Literature," Journal of Materials Education 16:327-362 (1994).

27. Martyn, J. "Unintentional Duplication of Research," New Scientist 377:388 (1964).

28. La Follette, M. C. "Avoiding Plagiarism: Some Thoughts on Use, Attribution, and Acknowledgement," Journal of Information Ethics 3(2):25-35 (Fall, 1994).

29. Garfield, E. "When to Cite," Library Quarterly 66(4):449-458 (October, 1996).

31. Garfield, E. Citation Indexing -- Its Theory and Application in Science, Technology and Humanities, ISI Press, 1979, 274 pgs.

32. Garfield, E. "Using the Science Citation Index to Avoid Unwitting Duplication of Research," Current Contents, July 21, 1971, reprinted in Essays of an Information Scientist, Volume 1, pages 219-20 (1977).

33. Mazur, R. H., Ellis, B. W., and Cammarata, P.S. "Correction Note," Journal of Biological Chemistry 237:3315 (1962).

34. Schwartz, D. P., and Pallansch, M. J. "tert-Butyl Hypochlorite for Detection of Nitrogenous Compounds on Chromatograms," Analytical Chemistry 30:219 (1958).

35. Small, H. "Co-Citation in the Scientific Literature: A New Measure of the Relationship Between Two Documents," Journal of the American Society for Information Science 24:265-9 (1973).

36. Garfield, E. and Sher, I.H. "ASCA (Automatic Subject Citation Alert) -- a New Personalized Current Awareness Service for Scientists," American Behavioral Scientist 10:29-32 (1967).

37. Garfield, E. and Sher, I. "ISI®'s experience with ASCA -- A Selective Dissemination System," Journal of Chemical Documentation 7:147-153 (1967).

38. Garfield, E. "Citation Classics -- From Obliteration to Immortality -- And the Role of Autobiography in Reporting the Realities Behind High Impact Research," Current Contents, November 8, 1993, reprinted in Essays of an Information Scientist, Volume 15, pages 387-391 (1993).

39. Garfield, E. "Contemporary Classics in the Life Sciences: An Autobiographical Feast," Current Contents, November 11, 1985, reprinted in Essays of an Information Scientist, Volume 8, pgs 410-5 (1985).

40. Campanario, J. M. "Consolation for the Scientist: Sometimes It Is Hard to Publish Papers That Are Later Highly Cited," Social Studies of Science 23:347-62 (1993).

41. Astin, H.S. "Citation Classics: Women's and Men's Perceptions of Their Contributions to Science," in Zuckerman, H., Cole, J.R., and Bruer, T., eds., The Outer Circle: Women in the Scientific Community. N.Y.: Norton (1991) p. 57-70.

42. Lundberg, G.D. "Landmarks," JAMA (Journal of the American Medical Association) 252:812 (1984).

43. Garfield, E. "Citation Classics from JAMA: How to Choose Landmark Papers When Your Memory Needs Jogging," Current Contents, August 3, 1987, and Essays, Volume 10, p. 205-17 (includes Garfield, E. "100 Classics from JAMA," JAMA 257:529 (1987)).

44. Merton, R. K. Social Theory and Social Structure, pp. 27-29, 35-38, NY: Free Press (1968) 702 pgs.

45. Garfield, E. "The 'Obliteration Phenomenon' in Science -- and the Advantage of Being Obliterated!" Current Contents No. 51/52, December 22, 1975, reprinted in Essays of an Information Scientist, Volume 2, pp 396-398 (1977).

46. National Science Board, Science & Engineering Indicators - 1993. Washington, DC: U.S. Government Printing Office (1993), 514 pages.

47. See special editions of the Journal of Information Ethics, edited by R. Hauptmann, Volume 3 (Nos. 1 & 2) (Spring & Fall, 1994).

48. Stent, G. S. "Prematurity and Uniqueness in Scientific Discovery," Scientific American 227:84-93 (1972).

49. Garfield, E. "Are the 1979 Prizewinners of Nobel Class?" Current Contents, September 22, 1980, reprinted in Essays of an Information Scientist, Volume 4, pgs 609-617 (1980).

50. Garfield, E. & Welljams-Dorof, A. "Of Nobel Class: A Citation Perspective on High Impact Research Authors," Theoretical Medicine 13:117-35 (1992).

51. Marshakova, I.V. "System of Document Connections Based on References," Nauch-Tekn. Inform. Ser 2 SSR 6:3-8 (1973).

52. Garfield, E., Sher, I. H., and Torpie, R. J. "The Use of Citation Data in Writing the History of Science." (1964) 76pp. ISI.

53. Hamilton, D. P. "Publishing by -- and for? -- the numbers," Science 250:1331-2 (December 7, 1990).

54. Pendlebury, D. A. "Science, Citation, and Funding," Science 251:1410-11 (March 22, 1991).

55. Price, D. J. deS. Little Science, Big Science...and Beyond, Columbia University Press, 1986.

56. Smith, L. C. "Citation Analysis," Library Trends 30:83-106 (1981).

57. Cronin, B. The Citation Process, Taylor Graham, London (1984) 103pgs.

58. White, H. D. & McCain, K. W. "Bibliometrics," Annual Review of Information Science and Technology 24:119-186 (1989).

59. Cozzens, S. E. "What Do Citations Count? The Rhetoric-First Model," Scientometrics 15:437-447 (1989).

60. Biddle, J. "A Citation Analysis of the Sources and Extent of Wesley Mitchell's Reputation," History of Political Economy 28(2):137-169 (1996).

61. Kessler, M. M. "Bibliographic Coupling Between Scientific Papers," American Documentation 14:10-25 (1963).
