Impact factors are widely used to rank and evaluate journals. They are also often used inappropriately as surrogates in evaluation exercises. The inventor of the Science Citation Index warns against the indiscriminate use of these data. Fourteen year cumulative impact data for 10 leading medical journals provide a quantitative indicator of their long term influence. In the final analysis, impact simply reflects the ability of journals and editors to attract the best papers available.

Counting references to rank the use of scientific journals was reported as early as 1927 by Gross and Gross.1 In 1955 I suggested that reference counting could measure "impact,"2 but the term "impact factor" was not used until the publication of the 1961 Science Citation Index (SCI) in 1963. This led to a byproduct, Journal Citation Reports (JCR), and a burgeoning literature using bibliometric measures. From 1975 to 1989, JCR appeared as supplementary volumes in the annual SCI. From 1990 to 1994 it appeared in microfiche, and in 1995 a CD ROM edition was launched.

The most used data in the JCR are impact factors: ratios obtained by dividing citations received in one year by papers published in the two previous years. Thus, the 1995 impact factor counts the citations in 1995 journal issues to "items" published in 1993 and 1994. I say "items" advisedly. There are a dozen major categories of editorial matter. JCR's impact calculations are based on original research and review articles, as well as notes. Letters of the type published in the BMJ and the Lancet are not included in the publication count. The vast majority of research journals do not have such extensive correspondence sections. The effects of these differences in calculating journal impact can be considerable.3,4
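The ratio described above can be sketched as a small function. This is only an illustration: the function name and the sample counts are hypothetical and are not taken from JCR, and the choice of which "items" enter the denominator is left to the caller.

```python
def impact_factor(citations_in_year, citable_items_prev_two_years):
    """Citations received in one year to items published in the two
    previous years, divided by the number of citable items (research
    articles, reviews, notes) published in those two years."""
    if citable_items_prev_two_years <= 0:
        raise ValueError("the journal must have published at least one citable item")
    return citations_in_year / citable_items_prev_two_years


# Hypothetical journal: 1200 citations in 1995 to items from 1993 and 1994,
# and 400 citable items published in 1993 and 1994 combined.
example = impact_factor(1200, 400)
print(example)  # 3.0
```

Because letters are counted as citing and cited material but not as citable items, changing what is included in the second argument can shift the result noticeably for journals with large correspondence sections.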

The ubiquitous and sometimes misplaced use of journal impact factors for evaluation has caused considerable controversy. They are probably the most widely used of all citation based measures. They were invented to permit reasonable comparison between large and small journals. Absolute citation counts preferentially give highest rank to the largest or the oldest journals. For example, in 1994, articles published in the BMJ regardless of age were cited 37 600 times. Of these, 5800 citations (about 15%) were to items published in 1992 and 1993.
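The arithmetic behind the BMJ example, and the way absolute and relative rankings can diverge, can be sketched as follows. The BMJ citation counts come from the text. The two journals in the list are hypothetical and serve only to show the effect of journal size.

```python
# Figures from the text: 1994 citations to the BMJ.
total_citations = 37_600   # citations to BMJ articles of any age
recent_citations = 5_800   # citations to items published in 1992 and 1993
share = recent_citations / total_citations
print(f"{share:.1%}")  # 15.4%

# Hypothetical journals: (name, total citations, citations to items from
# the two previous years, citable items in the two previous years).
journals = [
    ("Large journal", 200_000, 30_000, 8_000),
    ("Small review journal", 10_000, 2_000, 100),
]

# Ranking by absolute citation count favours the large journal.
by_total = sorted(journals, key=lambda j: j[1], reverse=True)

# Ranking by impact (recent citations per citable item) favours the small one.
by_impact = sorted(journals, key=lambda j: j[2] / j[3], reverse=True)

print(by_total[0][0])   # Large journal
print(by_impact[0][0])  # Small review journal
```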

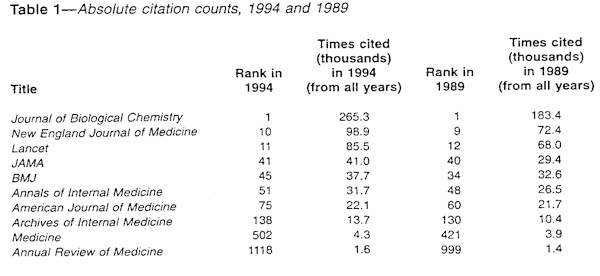

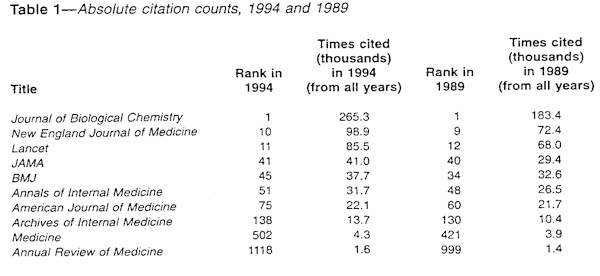

Table 1 shows absolute citation counts for nine English language clinical journals as well as the Journal of Biological Chemistry, which is included to emphasise the difference between absolute and relative citation of large journals. Table 2 lists these same journals ranked by 1994 impact. It is important to note that of the thousands of journals published and cited in SCI only 337 achieved a current impact higher than 3.0. The SCI processes over 3300 source journals and cites thousands more, but it does not include as sources hundreds of low impact applied and clinical journals. This has been a source of frustration to editors from the Third World. They often ask how they can improve impact so as to warrant inclusion.

It should be apparent from reviewing the two tables that the ranking relationship between quality and citation is not absolute. The Journal of Biological Chemistry is one of the most cited journals in the history of science but it is also among the largest. Publishing over 4000 research manuscripts a year (as do other high volume journals like Physical Review) inevitably leads to considerable variation in quality and impact of individual articles. As a consequence, while these journals publish many papers which become "citation classics," their current impact may not be as high as that of some smaller journals, especially certain review journals. Indeed, one of the highest impact journals is Annual Review of Biochemistry with a current impact of 42.2. But as the data for Annual Review of Medicine show, this is not an absolute rule. In general, a journal is well advised to publish authoritative review articles if it wishes to increase its impact. Since there are over 40 000 review articles published each year, not all will achieve high impact. Again, selection of reviews about active research fronts is important, as is their timing. Controversial topics may increase impact. A non-medical example is "cold fusion" by Fleischmann and Pons,5 which has been cited over 500 times. A recent medical example could be the "Concorde study," already cited in 150 papers.6 But hundreds of half-baked controversial ideas are essentially ignored.

It is widely believed that method papers are cited more than the average and thus increase journal impact. How lovely it would be if every method proved to be another Lowry method,8 cited over 8000 times in 1994 and over 250 000 in its lifetime. But the fact is that method journals do not achieve extraordinary impact since the vast majority of their papers, like clinical tests, are not unusual.

An editor could select authors on the basis of past performance. By checking their citation histories, one could undoubtedly increase the probability of publishing papers with higher potential impact. Some editors do this instinctively, especially when publishing the first few issues of a new specialty journal. In most cases, the most-cited papers for newer journals appear in the first

Over the years, the increase in multiauthored papers has been apparent. This is matched by an increase in multinational and multi-institutional clinical and epidemiological studies. At the Institute for Scientific Information (ISI), unpublished studies support the notion that these papers produce greater impact. ISI's Science Watch has regularly reported on the most-cited current papers in medicine. There is fierce competition among editors to publish these "hot papers." These undoubtedly contribute to increased current impact. But what about long term impact?

In spite of dozens of presentations by myself and others, there continues to be a certain mystique about journal selection for Current Contents, the Science Citation Index, and the Social Sciences Citation Index. My 1990 essay in Current Contents is still sent to those making such enquiries.10

Of the 4500 journals covered by SCI and SSCI, probably 3000 can be described as biomedically related. Of these, 500 account for 50% of what is published and 75% of what is cited. Of the 3300 covered in Medline, hundreds of low impact journals are not included by ISI for similar reasons: space and economics.

New journals continue to appear each year and must be evaluated as early as possible. But even Nature in its periodic reviews of new journals requires the passage of time before accepting journals to be reviewed. An experienced evaluator takes into account timing, format, subject matter, past performance, and other indicators such as internationality. The first issue of many journals is full of hope, but they soon exhaust the backlog of material needed to ensure continued, timely publication. Clearly a society publisher with years of experience does not launch a journal without a long term commitment, and its editorial standards will be well known. Inexperienced publishers often do not live up to their rosy expectations. The inclusion of abstracts and complete author, street, and email addresses are but a few artefacts that are factored into the judgment of minimum quality. ISI may also ask how well a particular specialty or country is represented in its coverage. And, after a few years of history, all other criteria being equal, one can look at a journal's impact.

This article has focused on journal impact factors and their role in what Stephen Lock described as "journalology."11 As with individual authors, a variety of indicators can be used to judge journals in a current or historical sense. Impact numbers are probably less important than the rankings which are obtained. Often there are only slight quantitative differences involved. The literature is replete with recommendations for corrective factors that should be considered, but in the final analysis subjective peer judgment is essential.

2. Garfield E. Citation indexes for science: a new dimension in documentation through association of ideas. Science 1955;122:108-11.

3. Garfield E. Which medical journals have the greatest impact? Ann Intern Med 1986;105:313-20.

4. Garfield E. Why are the impacts of the leading medical journals so similar and yet so different? Item-by-item audits reveal a diversity of editorial material. In: Essays of an information scientist. Vol 10. Philadelphia: ISI Press, 1987:7-13.

5. Fleischmann M, Pons S, Hawkins M. Electrochemically induced nuclear fusion of deuterium. Journal of Electroanalytical Chemistry and Interfacial Electrochemistry 1989;261:301-8.

6. Aboulker JP, Swart AM. Preliminary analysis of the Concorde trial. Lancet 1993;341:889-90.

7. Marshall BJ, Warren JR. Unidentified curved bacilli in the stomach of patients with gastritis and peptic ulceration. Lancet 1984;i:1311-5.

8. Lowry OH, Rosebrough NJ, Farr AL, Randall RJ. Protein measurement with the folin phenol reagent. Journal of Biological Chemistry 1951;193:265-75.

9. Van Trigt AM, de Jong-van den Berg LT, Voogt LM, Willems J, Tromp TF, Haaijer-Ruskamp FM. Setting the agenda: does the medical literature set the agenda for articles about medicines in the newspapers? Soc Sci Med 1995;41:893-9.

10. Garfield E. How ISI selects journals for coverage: quantitative and qualitative considerations. In: Essays of an information scientist. Vol 13. Philadelphia: ISI Press, 1990:185-93.

11. Lock SP. "Journalology": are the quotes needed? In: Garfield E. Essays of an information scientist. Vol 13. Philadelphia: ISI Press, 1990:19-24.

12. Garfield E. How to use citation analysis for faculty evaluations, and when is it relevant? Parts 1 and 2. In: Essays of an information scientist. Vol 6. Philadelphia: ISI Press, 1984:354-72.

(Accepted 17 May 1996)